1 in 4 Social Media Users Say QAnon Conspiracy Theories Are at Least Somewhat Accurate

October 14, 2020 at 6:00 am ET

- 12% of social media users say they have positively engaged with or posted about QAnon material.

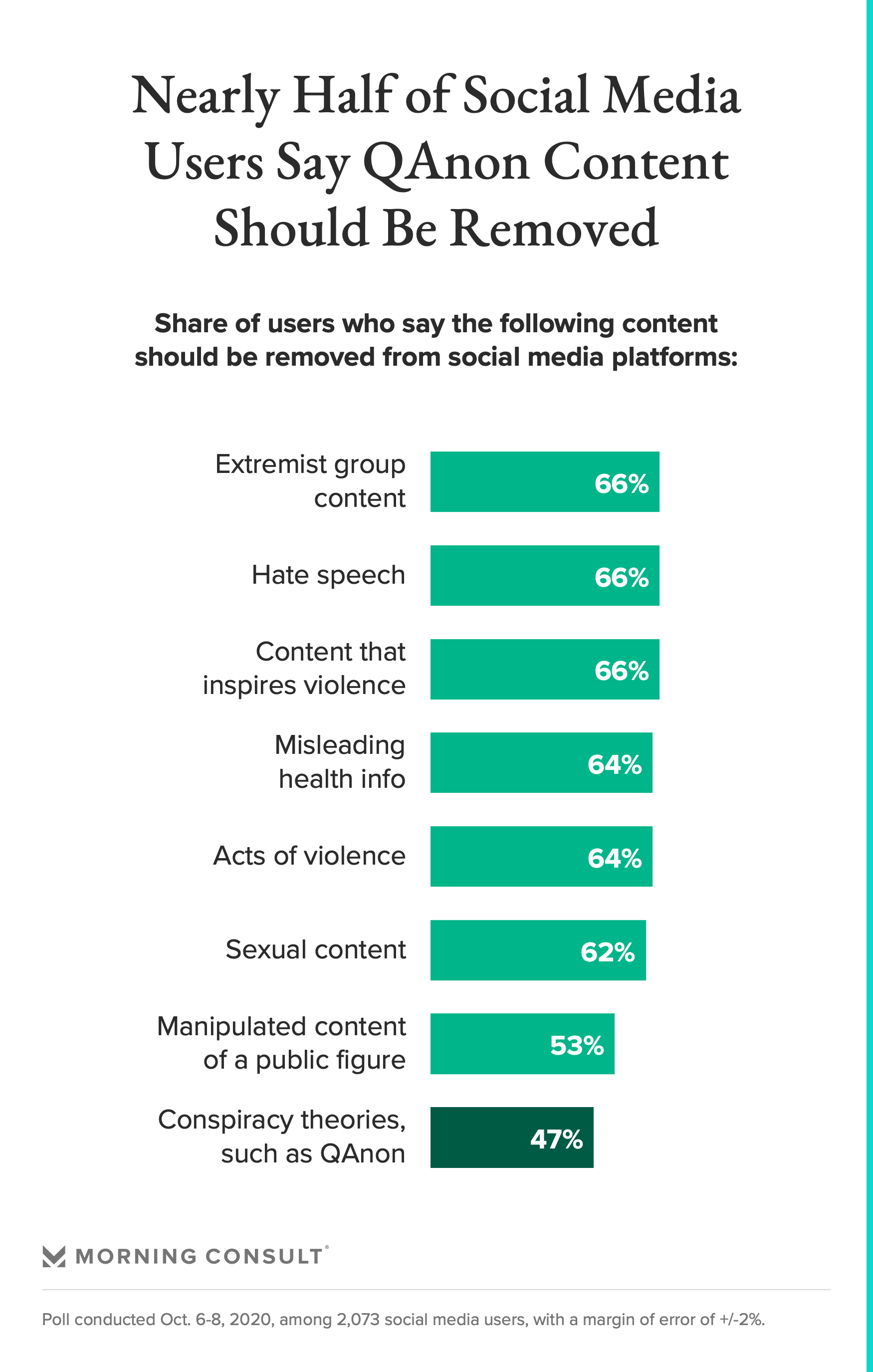

Among various types of objectionable content, conspiracy theories drew the smallest share of social media users (47%) who agreed that such content should be removed from platforms.

As Facebook Inc. and Twitter Inc. clamp down on content tied to the pro-Trump conspiracy theory QAnon, new Morning Consult survey data indicates it won’t be an easy task.

One-quarter of social media users who have heard of QAnon say they believe the group's conspiracy theories are at least somewhat accurate, while 12 percent say they have either engaged with or posted about QAnon content in a positive way.

The survey was conducted Oct. 6-8 among 2,199 U.S. adults, including 2,073 social media users, and has a margin of error of 2 percentage points. Of the full sample, 1,000 adults said they had heard of QAnon; that subsample has a margin of error of 3 percentage points.

QAnon members believe that a group of Democratic politicians and Hollywood moguls are part of an underground cabal that runs a child sex trafficking ring, which President Donald Trump has been secretly fighting — claims that Trump has refused to denounce.

The baseless ideas stem from a series of anonymous 4chan posts, beginning in October 2017, by "Q," a user who claims to be a government official with knowledge of Trump's work. In recent months, those ideas have gained a larger following on Facebook, Twitter and other mainstream social media platforms as homebound internet users spend more time in online forums during the pandemic.

QAnon has also entered the mainstream via the ballot box: Media Matters, a left-leaning nonprofit and media watchdog that has been tracking QAnon's movement for the past three years, estimates that 26 congressional candidates, mostly Republicans, who have endorsed or shown support for QAnon have secured spots on November ballots.

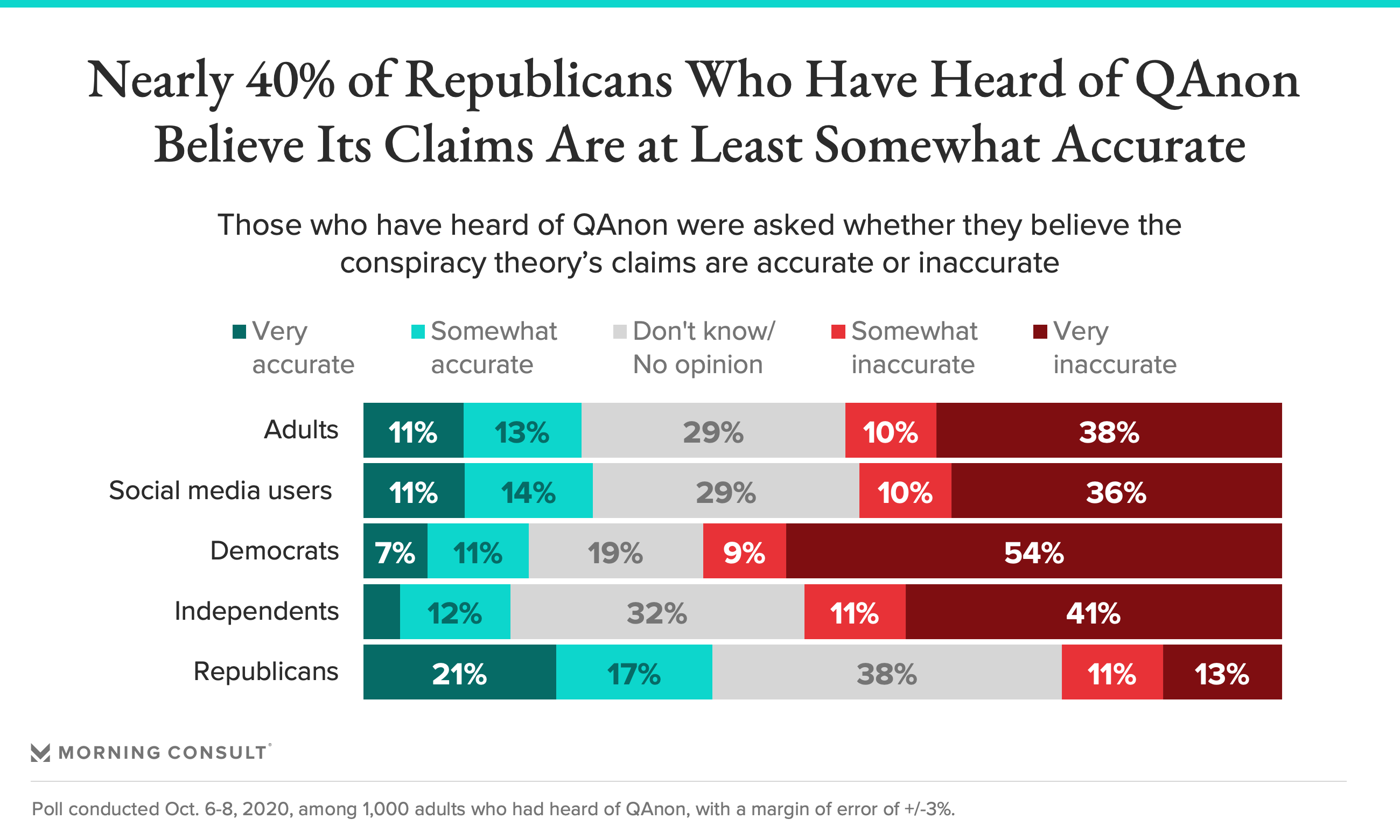

Republicans, in particular, have been drawn to QAnon, with 38 percent of GOP adults who had heard of QAnon saying they believed the claims were at least somewhat accurate, compared to 18 percent of Democrats and 16 percent of independents.

In May 2019, the Federal Bureau of Investigation reportedly flagged QAnon's ideas as a potential domestic terror threat because of violent acts its members had committed in the name of their beliefs. Yet many social media companies have only recently moved to stop the proliferation of QAnon content on their sites.

Last week, Facebook said it would ban all QAnon-related Facebook pages and groups, as well as Instagram accounts, expanding an August policy that had removed only those that promoted violence. In July, Twitter banned 7,000 QAnon accounts and stopped recommending accounts and content related to the conspiracy theory.

A Twitter spokesperson said the company has cut impressions on QAnon content in half to date. Facebook did not respond to a request for comment.

Angelo Carusone, the president of Media Matters for America, said the companies face an uphill battle given how quickly the group’s content has spread in the months since coronavirus lockdowns started.

“This April to end of September period of time has been the single most explosive growth of QAnon-affiliated pages, and a big piece of that is Facebook,” said Carusone. Before the ban on QAnon content, membership in Facebook groups tied to the theory had doubled since April as those who already believed in anti-vaccination and anti-government conspiracies migrated into QAnon communities, he said.

Social media users may not be as keen to classify QAnon as a problem. Morning Consult asked about half of the social media users surveyed whether the spread of conspiracy theories like QAnon on social media is a problem: 39 percent called it a "major problem," and another 28 percent called it a "minor problem."

The other half of the sample was asked the same question with no mention of QAnon. Among those respondents, 46 percent said the spread of conspiracy theories through social media was a "major problem," and 32 percent said it was a minor one.

And 47 percent of social media users said that conspiracy theory content, including QAnon ideas, should be removed from the platforms, the lowest share for any type of content listed in the question. Thirteen percent said such content should be neither removed nor labeled with a warning, the highest share for any content type tested.

A small but notable group of social media users also said that their friends or family members are engaging with QAnon content: 13 percent said their friends have engaged positively with QAnon content online, and 11 percent said the same about their family members. Those figures were similar no matter the respondent’s political affiliation.

The proliferation of QAnon content since the pandemic lockdowns started has now made it impossible to completely eradicate the group's online spaces, Carusone said.

QAnon’s members are nimble, with many of them able to rally around a new hashtag, forum or other online organizing tool much faster than others after their old groups or hashtags have been removed or flagged by social media moderators. For instance, in recent months, the group has started to mobilize around the hashtag #SaveTheChildren as a recruitment tool.

“Everything that happened before April was bad and a concern, but the reason we’re having a conversation about QAnon right now is because it’s never going away,” Carusone said. “The ship has sailed.”

