The Covid-19 Infodemic — Applying the Epidemiologic Model to Counter Misinformation | NEJM

Throughout the world, including the United States, medical professionals and patients are facing both a pandemic and an infodemic — the first caused by SARS-CoV-2 and the second by misinformation and disinformation. The Annenberg Public Policy Center’s tracking of social and legacy media has found that millions of people have been exposed to deceptive material alleging that SARS-CoV-2 is a hoax or that experts are exaggerating its severity and the extent of its spread, that masks are ineffective or increase infection risk, or that Covid-19 vaccines cause the disease, alter the recipient’s DNA, or include tracking devices. Believing such claims is associated with a lower likelihood of engaging in preventive behavior and a lower willingness to be vaccinated.1

We believe the intertwining spreads of the virus and of misinformation and disinformation require an approach to counteracting deceptions and misconceptions that parallels epidemiologic models by focusing on three elements: real-time surveillance, accurate diagnosis, and rapid response.

First, existing infodemic-surveillance methods could be strengthened to function similarly to coordinated syndromic-surveillance systems. Infodemic-surveillance systems could activate in response to statistical deviations from baseline rates of misinformation or other empirically defined thresholds or markers, such as when the prevalence or placement of misinformation in a known seeding ground suggests the likelihood of contagious spread. Had infodemic monitoring been in place, it might have prevented a “superspreader” event that began on October 12, 2020, when, in a misreading of a Centers for Disease Control and Prevention (CDC) report, The Federalist, a conservative online magazine that is sometimes cited by right-wing radio and cable hosts, reported that “masks and face coverings are not effective in preventing the spread of Covid-19.” Had the misleading article been caught by a dedicated team that quickly engaged possible readers online, Fox News’s Tucker Carlson might not have told his more than 4 million viewers the next evening that 85% of people who were infected with Covid-19 in July 2020 had been wearing a mask. The superspreading escalated when President Donald Trump echoed the same mischaracterization to more than 13 million viewers of a nationally televised October 15 town hall. Had the article in The Federalist or Carlson’s comments been immediately and widely called out, Savannah Guthrie, the town hall moderator, might have been better equipped to counter the inaccurate claim. Instead, she simply asserted, “It didn’t say that. I know that study.”

To halt such misinformation cascades, sensitive surveillance systems need to be triggered at the inflection point of the infodemic curve, before dangerous misinformation goes viral. A finely tuned system would ensure that a response comes neither too early, when it risks drawing attention to the misinformation, nor too late, after deceptions and misconceptions have taken hold.
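The trigger logic described above can be illustrated with a simple baseline-deviation rule of the kind used in syndromic surveillance. The sketch below is hypothetical and not the authors' system. It assumes a daily count of posts matching one misinformation category, a 7-day baseline window, and an alert threshold of 3 standard deviations. All three are illustrative choices, and in practice they would be set empirically. Lowering the threshold fires earlier but raises the risk of amplifying a claim that would have faded. Raising it fires later, possibly after a claim has taken hold.

```python
from statistics import mean, stdev
from typing import Optional, Sequence


def deviation_score(history: Sequence[float], today: float, window: int = 7) -> Optional[float]:
    """Return how many standard deviations today's count lies above the mean
    of the last `window` days, or None if there is not enough history.

    Hypothetical sketch: `history` is assumed to hold daily counts of posts
    matching a single misinformation category, oldest first."""
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    if len(history) < window:
        return None
    baseline = list(history[-window:])
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # Flat baseline: any increase is treated as an unbounded deviation.
        return float("inf") if today > mu else 0.0
    return (today - mu) / sigma


def should_alert(history: Sequence[float], today: float,
                 window: int = 7, threshold: float = 3.0) -> bool:
    """Fire an alert when today's count exceeds the assumed threshold."""
    score = deviation_score(history, today, window)
    return score is not None and score > threshold


if __name__ == "__main__":
    daily_counts = [10, 12, 11, 9, 10, 11, 12]  # illustrative numbers, not real data
    print(should_alert(daily_counts, 30))  # True: far above the baseline
    print(should_alert(daily_counts, 12))  # False: within normal variation
```

A real system would also weigh where a post appears, such as a known seeding ground, and not just how many posts appear.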

Since lies tend to spread faster than accurate information does and an overwhelming amount of misinformation and disinformation circulates on social media, companies such as Facebook could provide researchers with access to aggregated and deidentified data on the spread of misinformation, as scholars have requested.2 Lack of access to such data is the equivalent of a near-complete blackout of epidemiologic data from disease epicenters.

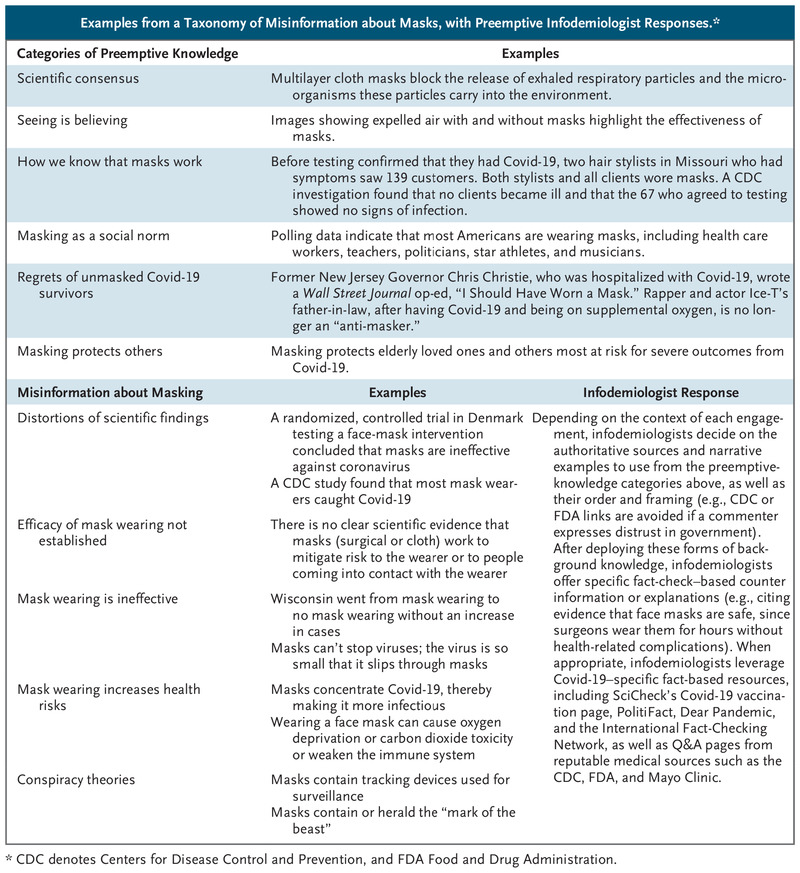

Examples from a Taxonomy of Misinformation about Masks, with Preemptive Infodemiologist Responses.


Second, just as clinicians bring a classification system to the diagnostic process, scientists seek to answer a set of fundamental questions when they encounter new infectious diseases. The Annenberg Public Policy Center (where one of us works) parses misinformation and deception into categories paralleling these questions: origins, existence and virulence, transmission, diagnosis and tracing, prevention, preventive and treatment interventions, and vaccination. For example, our taxonomy of misinformation related to masking, which is categorized under prevention, encompasses five types of misinformation: distortions of scientific findings, assertions that the effectiveness of masks hasn’t been proven, claims that masks are ineffective, suggestions that masks increase health risks, and conspiracy theories about masks (see table). Knowing the type of misinformation that is circulating allows us to develop strategies for buffering audiences from deceptions or misconceptions and, when necessary, to deploy a rapid-response system to rebut and displace inaccurate claims before they take hold. Studies show that misinformation that isn’t immediately counteracted can be committed to long-term memory.3

Third, in the epidemiologic model, rapid response consists of containment and treatment by medical personnel. So-called infodemiologists — modeled on the CDC’s corps of Epidemic Intelligence Service (EIS) officers — can counteract misinformation in traditional media sources and online using evidence-based methods, including empathetic engagement, motivational interviewing,4 leveraging trusted sources, and pairing rebuttals with alternative explanations.5 Drawing on intelligence gathered from surveillance and identification systems, infodemiologists can inoculate people against dangerous deceptions.

For example, it was predictable that vaccination opponents would misattribute coincidental deaths, such as that of baseball legend Hank Aaron, to vaccine receipt. An infodemiologist might expose the post hoc ergo propter hoc fallacy at work in such claims by telling the story of someone they knew who died just before a scheduled vaccination. Anticipating distrust of government and the health care system in communities of color, an infodemiologist might provide links to articles such as “60 Black health experts urge Black Americans to get vaccinated” in the New York Times or to Eugenia South’s essay for NBC News explaining why, as a Black doctor, she decided to get the Covid-19 vaccine.

Critica (where two of us work) is among the organizations training science-educated infodemiologists to do this work. The primary audience doesn’t include people who deny that Covid-19 exists or are staunchly opposed to vaccination — evidence suggests that people with fixed beliefs aren’t easily persuadable — but rather, people who are susceptible to misinformation and hesitant to be vaccinated. Just as EIS officers collaborate with local experts and communities, infodemiologists should be community-based vaccine champions and partner with specialist societies to promote provaccine messages. Training in effective communication methods minimizes the likelihood of infodemiologists inadvertently increasing vaccine hesitancy. Information goes both ways: these specialists receive surveillance information and recommendations on response strategies while also reporting unusual or prominent types of misinformation circulating in their communities.

How does infodemic surveillance work in practice? Various sources provide the data feeds, including syndromic platforms such as Google’s Coronavirus Search Trends website, Facebook’s CrowdTangle, and other platform-based monitoring tools, as well as social listening and monitoring systems for social and traditional media. Infodemiologists’ on-the-ground reports augment these data streams, much as clinicians who are members of the Program for Monitoring Emerging Diseases (ProMED) share information within the sentinel network. As with syndromic surveillance for infectious diseases, action thresholds can be set empirically. In the case of the CDC report, for example, surveillance would have spotted the mischaracterization in The Federalist. Since research has shown that content from fringe conservative outlets is picked up and amplified by Fox News personalities,2 the system would have triggered a response. A preemptive message in which the study’s authors reiterated their findings and rejected the misreading could have been distributed to community-based infodemiologists and fact-checkers, thereby permitting displacement and inoculation to occur before Carlson’s or Trump’s amplification (or preventing the amplification altogether). After hearing Trump repeat the mistaken claim, fact-checkers did disseminate a rebuttal from the study’s authors, but by then, millions of people had been exposed to the misinformation.

Social determinants of health and individual behaviors contribute to community-level variation in infectious disease risk. Similarly, people’s information environment, psychology (e.g., uncertainty avoidance), and information-consumption habits contribute to their susceptibility to questionable content. As a result, the likelihood of acceptance of disinformation and misinformation varies. Our model will be more effective for people intrigued by misinformation but not yet under its thrall than for committed acolytes sequestered in echo chambers. But the model’s strength, like that of epidemiology, is in recognizing that effective prevention and response require mutually reinforcing interventions at all levels of society, including enhancing social-media algorithmic transparency, bolstering community-level norms, and establishing incentives for healthier media diets.