IPG Report Criticizes Facebook, YouTube for Spreading False Information

- A new report from ad giant IPG criticizes major social networks such as YouTube and Facebook for spreading misinformation.

- The report calls Facebook a “significant source of climate misinformation.”

- IPG encouraged its clients, which include Coca-Cola and AmEx, to move their dollars to reliable news sources.

Ad giant IPG this month published a new report that says the major social networks have failed to contain the spread of false information and conspiracy theories, posing a threat to advertisers.

The report went to select clients of IPG’s ad-buying division Mediabrands, which include Coca-Cola, American Express, and T-Mobile.

“The Dis/Misinformation Challenge for Marketers” report was compiled by Magna, the research division of IPG Mediabrands, and comes as social groups plan more Facebook advertiser boycotts in the wake of critical reporting on Facebook by The Wall Street Journal.

While the report stopped short of recommending that marketers pull ad spend from platforms, it encouraged them to consider moving their dollars away from platforms whose policies don't reflect their values. IPG also says advertisers should press platforms to do a better job of banning disinformation, and should use tools such as NewsGuard that promote reliable news sources.

“If brand values or corporate social responsibility principles do not align with the ability of any given platform to moderate their platform, then serious consideration should be given to whether that platform is appropriate for the brand,” the report states.

“We need to stop equivocating. It’s time to invest in platforms that are doing the right thing,” said Joshua Lowcock, chief digital and brand safety officer at IPG agency UM, who helped write the report.

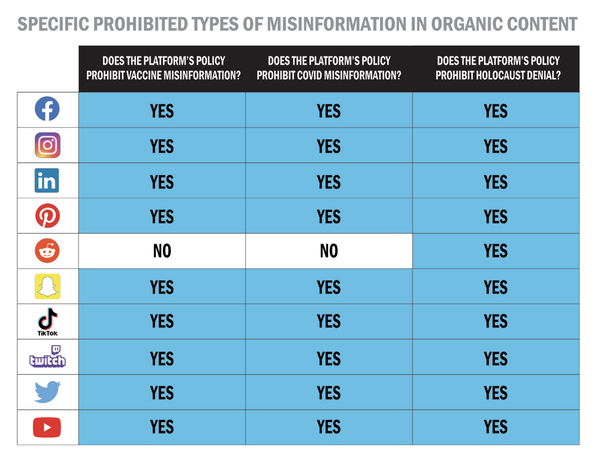

Social platforms have taken steps to address the spread of false information. But the report said none has come close to fully addressing the problem, and it blamed their algorithms for consistently recommending content on topics including Holocaust denial.

The report states that all platforms monetize false information in some way.

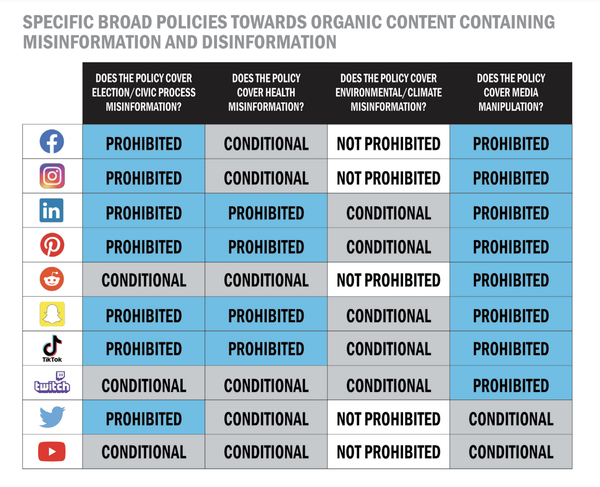

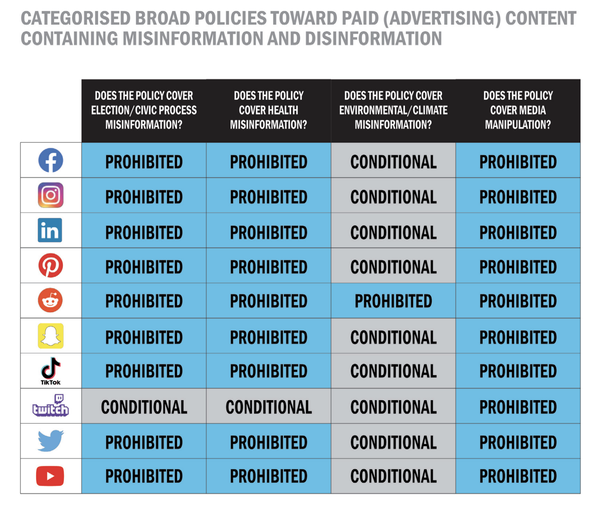

The report found a lack of consistency in how platforms approach misinformation and disinformation, a higher standard for paid content than user-generated posts, and a failure to protect the advertisers who fund the platforms when they’re subject to coordinated attacks.

“It is easier for a bad actor to spread misinformation organically about your brand than it is for you to market your brand,” the report states.

Top platforms have focused on hate speech and child safety but have not properly addressed the proliferation of intentionally misleading ads and organic posts about elections, vaccines, and climate change, Lowcock said. Media manipulation, meaning posts containing altered images and videos, is another problem, he said.

As an example, Lowcock cited videos on Google-owned YouTube claiming that 5G technology causes the coronavirus, a conspiracy theory that inspired the destruction of around 80 telecommunications towers in the UK in 2020. The report states that YouTube left these videos up for some time and ran ads on them because of ineffective safety controls.

Lowcock also said platforms like TikTok continue to host antisemitic content despite promising to control it. The report cites a 2021 study by the Anti-Defamation League that found Facebook still hosted antisemitic groups and posts several months after CEO Mark Zuckerberg said in late 2020 that he would ban them.

YouTube did not provide comment by publication time. Facebook and TikTok did not respond to requests for comment.

According to the report, only LinkedIn, Pinterest and Twitch prohibit general misinformation and disinformation among users and advertisers.

Social platforms’ policies are inconsistent when it comes to certain topics. The report said Reddit was the only platform in its study that bans ads that contain false information about climate change and cited a study concluding that Facebook made $86 million in 2020 from ads containing false climate-related claims.

The report also cited a 2020 study that found ads ran before climate misinformation videos on YouTube.

Ads don’t appear next to organic posts on Reddit, but Lowcock said its reliance on community moderators to handle misinformation about vaccines and the coronavirus still makes some advertisers wary of the platform.

Platforms have rolled out features to contain problematic content. The report cites Pinterest’s 2019 vaccine misinformation ban; Twitter’s new “Birdwatch” feature, which lets users flag false tweets; and Snap’s decision to ban former President Donald Trump based on his off-platform behavior.

Still, Lowcock said, platforms tend to address problems such as 2020 election conspiracy theories only while they are topical and otherwise ignore them, allowing false information to spread.
