What Facebook knew about how it radicalized users

In summer 2019, a new Facebook user named Carol Smith signed up for the platform, describing herself as a politically conservative mother from Wilmington, North Carolina. Smith’s account indicated an interest in politics, parenting and Christianity and followed a few of her favorite brands, including Fox News and then-President Donald Trump.

Though Smith had never expressed interest in conspiracy theories, in just two days Facebook was recommending she join groups dedicated to QAnon, a sprawling and baseless conspiracy theory and movement that claimed Trump was secretly saving the world from a cabal of pedophiles and Satanists.

Smith didn’t follow the recommended QAnon groups, but whatever algorithm Facebook was using to determine how she should engage with the platform pushed ahead just the same. Within one week, Smith’s feed was full of groups and pages that had violated Facebook’s own rules, including those against hate speech and disinformation.

Smith wasn’t a real person. A researcher employed by Facebook invented the account, along with those of other fictitious “test users” in 2019 and 2020, as part of an experiment studying the platform’s role in misinforming and polarizing users through its recommendation systems.

That researcher said Smith’s Facebook experience was “a barrage of extreme, conspiratorial, and graphic content.”

The body of research consistently found Facebook pushed some users into “rabbit holes,” increasingly narrow echo chambers where violent conspiracy theories thrived. People radicalized through these rabbit holes make up a small slice of total users, but at Facebook’s scale, that can mean millions of individuals.

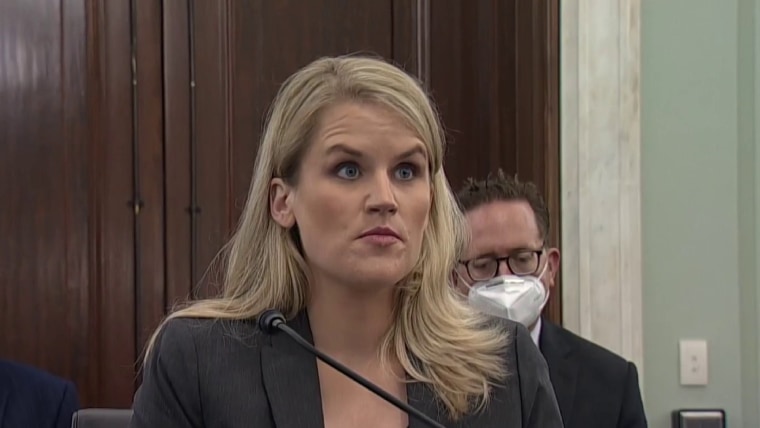

The findings, communicated in a report titled “Carol’s Journey to QAnon,” were among thousands of pages of documents included in disclosures made to the Securities and Exchange Commission and provided to Congress in redacted form by legal counsel for Frances Haugen, who worked as a Facebook product manager until May. Haugen is now asserting whistleblower status and has filed several specific complaints that Facebook puts profit over public safety. Earlier this month, she testified about her claims before a Senate subcommittee.

Versions of the disclosures — which redacted the names of researchers, including the author of “Carol’s Journey to QAnon” — were shared digitally and reviewed by a consortium of news organizations, including NBC News. The Wall Street Journal published a series of reports based on many of the documents last month.

“While this was a study of one hypothetical user, it is a perfect example of research the company does to improve our systems and helped inform our decision to remove QAnon from the platform,” a Facebook spokesperson said in a response to emailed questions.

Facebook CEO Mark Zuckerberg has broadly denied Haugen’s claims, defending his company’s “industry-leading research program” and its commitment to “identify important issues and work on them.” The documents released by Haugen partly support those claims, but they also highlight the frustrations of some of the employees engaged in that research.

Among Haugen’s disclosures are research, reports and internal posts that suggest Facebook has long known its algorithms and recommendation systems push some users to extremes. And while some managers and executives ignored the internal warnings, anti-vaccine groups, conspiracy theory movements and disinformation agents took advantage of their permissiveness, threatening public health, personal safety and democracy at large.

“These documents effectively confirm what outside researchers were saying for years prior, which was often dismissed by Facebook,” said Renée DiResta, technical research manager at the Stanford Internet Observatory and one of the earliest to warn about the risks of Facebook’s recommendation algorithms.

Facebook’s own research shows how easily a relatively small group of users has been able to hijack the platform, and for DiResta, it settles any remaining question about Facebook’s role in the growth of conspiracy networks.

“Facebook literally helped facilitate a cult,” she said.

‘A pattern at Facebook’

For years, company researchers had been running experiments like Carol Smith’s to gauge the platform’s hand in radicalizing users, according to the documents seen by NBC News.

This internal work repeatedly found that recommendation tools pushed users into extremist groups, findings that helped inform policy changes and tweaks to recommendations and news feed rankings. Those rankings are a tentacled, ever-evolving system widely known as “the algorithm” that pushes content to users. But the research at that time stopped well short of inspiring any movement to change the groups and pages themselves.

That reluctance was indicative of “a pattern at Facebook,” Haugen told reporters this month. “They want the shortest path between their current policies and any action.”

“There is great hesitancy to proactively solve problems,” Haugen added.

A Facebook spokesperson disputed the suggestion that the research had not pushed the company to act, pointing to changes to groups announced in March.

While QAnon followers committed real-world violence in 2019 and 2020, groups and pages related to the conspiracy theory skyrocketed, according to internal documents. The documents also show how teams inside Facebook took concrete steps to understand and address those issues — some of which employees saw as too little, too late.

By summer 2020, Facebook was hosting thousands of private QAnon groups and pages, with millions of members and followers, according to an unreleased internal investigation.

A year after the FBI designated QAnon as a potential domestic terrorist threat in the wake of standoffs, planned kidnappings, harassment campaigns and shootings, Facebook labeled QAnon a “Violence Inciting Conspiracy Network” and banned it from the platform, along with militias and other violent social movements. A small team working across several of Facebook’s departments found that its platforms had hosted hundreds of ads on Facebook and Instagram, worth thousands of dollars and drawing millions of views, “praising, supporting, or representing” the conspiracy theory.

The Facebook spokesperson said in an email that the company has “taken a more aggressive approach in how we reduce content that is likely to violate our policies, in addition to not recommending Groups, Pages or people that regularly post content that is likely to violate our policies.”

For many employees inside Facebook, the enforcement came too late, according to posts left on Workplace, the company’s internal message board.

“We’ve known for over a year now that our recommendation systems can very quickly lead users down the path to conspiracy theories and groups,” one integrity researcher, whose name had been redacted, wrote in a post announcing she was leaving the company. “This fringe group has grown to national prominence, with QAnon congressional candidates and QAnon hashtags and groups trending in the mainstream. We were willing to act only *after* things had spiraled into a dire state.”

‘We should be concerned’

While Facebook’s ban initially appeared effective, a problem remained: The removal of groups and pages didn’t wipe out QAnon’s most extreme followers, who continued to organize on the platform.

“There was enough evidence to raise red flags in the expert community that Facebook and other platforms failed to address QAnon’s violent extremist dimension,” said Marc-André Argentino, a research fellow at King’s College London’s International Centre for the Study of Radicalisation, who has extensively studied QAnon.

Believers simply rebranded as anti-child-trafficking groups or migrated to other communities, including those around the anti-vaccine movement.

It was a natural fit. Researchers inside Facebook studying the platform’s niche communities found violent conspiratorial beliefs to be connected to Covid-19 vaccine hesitancy. In one study, researchers found QAnon community members were also highly concentrated in anti-vaccine communities. Anti-vaccine influencers had similarly embraced the opportunity of the pandemic and used Facebook’s features like groups and livestreaming to grow their movements.

“We do not know if QAnon created the preconditions for vaccine hesitancy beliefs,” researchers wrote. “It may not matter either way. We should be concerned about people affected by both problems.”

QAnon believers also jumped to groups promoting former President Donald Trump’s false claim that the 2020 election was stolen, groups that trafficked in a hodgepodge of baseless conspiracy theories alleging voters, Democrats and election officials were somehow cheating Trump out of a second term. This new coalition, largely organized on Facebook, ultimately stormed the U.S. Capitol on Jan. 6, according to a report included in the document trove and first reported by BuzzFeed News in April.

These conspiracy groups had become the fastest-growing groups on Facebook, according to the report, but Facebook wasn’t able to control their “meteoric growth,” the researchers wrote, “because we were looking at each entity individually, rather than as a cohesive movement.” A Facebook spokesperson told BuzzFeed News it took many steps to limit election misinformation but that it was unable to catch everything.

Facebook’s enforcement was “piecemeal,” the team of researchers wrote, noting, “we’re building tools and protocols and having policy discussions to help us do this better next time.”

‘A head-heavy problem’

The attack on the Capitol invited harsh self-reflection from employees.

One team invoked the lessons learned during QAnon’s moment to warn about permissiveness with anti-vaccine groups and content, which researchers found comprised up to half of all vaccine content impressions on the platform.

“In rapidly-developing situations, we’ve often taken minimal action initially due to a combination of policy and product limitations making it extremely challenging to design, get approval for, and roll out new interventions quickly,” the report said. QAnon was offered as an example of a time when Facebook was “prompted by societal outcry at the resulting harms to implement entity takedowns” for a crisis on which “we initially took limited or no action.”

The attempt to overturn the election also invigorated efforts to clean up the platform more proactively.

Facebook’s “Dangerous Content” team formed a working group in early 2021 to figure out ways to deal with the kind of users who had been a challenge for Facebook: communities including QAnon, Covid-denialists and the misogynist incel movement that weren’t obvious hate or terrorism groups but that, by their nature, posed a risk to the safety of individuals and societies.

The focus wasn’t to eradicate them, but to curb the growth of these newly branded “harmful topic communities,” with the same algorithmic tools that had allowed them to grow out of control.

“We know how to detect and remove harmful content, adversarial actors, and malicious coordinated networks, but we have yet to understand the added harms associated with the formation of harmful communities, as well as how to deal with them,” the team wrote in a 2021 report.

In a February report, Facebook researchers got creative. An integrity team detailed an internal system meant to measure and protect users against societal harms, including radicalization, polarization and discrimination, that Facebook’s own recommendation systems had helped cause. Building on a previous research effort called “Project Rabbithole,” the new program was dubbed “Drebbel,” after Cornelis Drebbel, a 17th-century Dutch engineer known for inventing the first navigable submarine and the first thermostat.

The Drebbel group was tasked with discovering and ultimately stopping the paths that moved users toward harmful content on Facebook and Instagram, including in anti-vaccine and QAnon groups.

A post from the Drebbel team praised the earlier research on test users. “We believe Drebbel will be able to scale this up significantly,” they wrote.

“Group joins can be an important signal and pathway for people going towards harmful and disruptive communities,” the group stated in a post to Workplace. “Disrupting this path can prevent further harm.”

The Drebbel group features prominently in Facebook’s “Deamplification Roadmap,” a multistep plan published on Workplace on Jan. 6 that includes a complete audit of recommendation algorithms.

In March, the Drebbel group posted about its progress via a study and suggested a way forward. If researchers could systematically identify the “gateway groups,” those that fed into anti-vaccination and QAnon communities, they wrote, maybe Facebook could put up roadblocks to keep people from falling through the rabbit hole.

The Drebbel “Gateway Groups” study looked back at a collection of QAnon and anti-vaccine groups that had been removed for violating policies around misinformation and violence and incitement. It used the membership of these purged groups to study how users had been pulled in. Drebbel identified 5,931 QAnon groups with 2.2 million total members, half of whom had joined through so-called gateway groups. Of the 1.7 million members of 913 anti-vaccination groups, the study found that 1 million had joined via gateway groups. (Facebook has said it recognizes the need to do more.)

Facebook integrity employees warned in an earlier report that anti-vaccine groups could become more extreme.

“Expect to see a bridge between online and offline world,” the report said. “We might see motivated users create sub-communities with other highly motivated users to plan action to stop vaccination.”

A separate cross-department group reported this year that vaccine hesitancy in the U.S. “closely resembled” QAnon and Stop the Steal movements, “primarily driven by authentic actors and community building.”

“We found, like many problems at FB,” the team wrote, “that this is a head-heavy problem with a relatively few number of actors creating a large percentage of the content and growth.”

The Facebook spokesperson said the company had “focused on outcomes” in relation to Covid-19 and that it had seen vaccine hesitancy decline by 50 percent, according to a survey it conducted with Carnegie Mellon University and the University of Maryland.

Whether Facebook’s newest integrity initiatives will be able to stop the next dangerous conspiracy theory movement or the violent organization of existing movements remains to be seen. But their policy recommendations may carry more weight now that the violence on Jan. 6 laid bare the outsize influence and dangers of even the smallest extremist communities and the misinformation that fuels them.

“The power of community, when based on harmful topics or ideologies, potentially poses a greater threat to our users than any single piece of content, adversarial actor, or malicious network,” a 2021 report concluded.

The Facebook spokesperson said the recommendations in the “Deamplification Roadmap” are on track: “This is important work and we have a long track record of using our research to inform changes to our apps,” the spokesperson wrote. “Drebbel is consistent with this approach, and its research helped inform our decision this year to permanently stop recommending civic, political or news Groups on our platforms. We are proud of this work and we expect it to continue to inform product and policy decisions going forward.”

CORRECTION (Oct. 22, 2021, 7:06 p.m. ET): A previous version of this article misstated the purpose of a study conducted by Facebook’s Drebbel team. It studied groups that Facebook had removed, not those that were currently active.