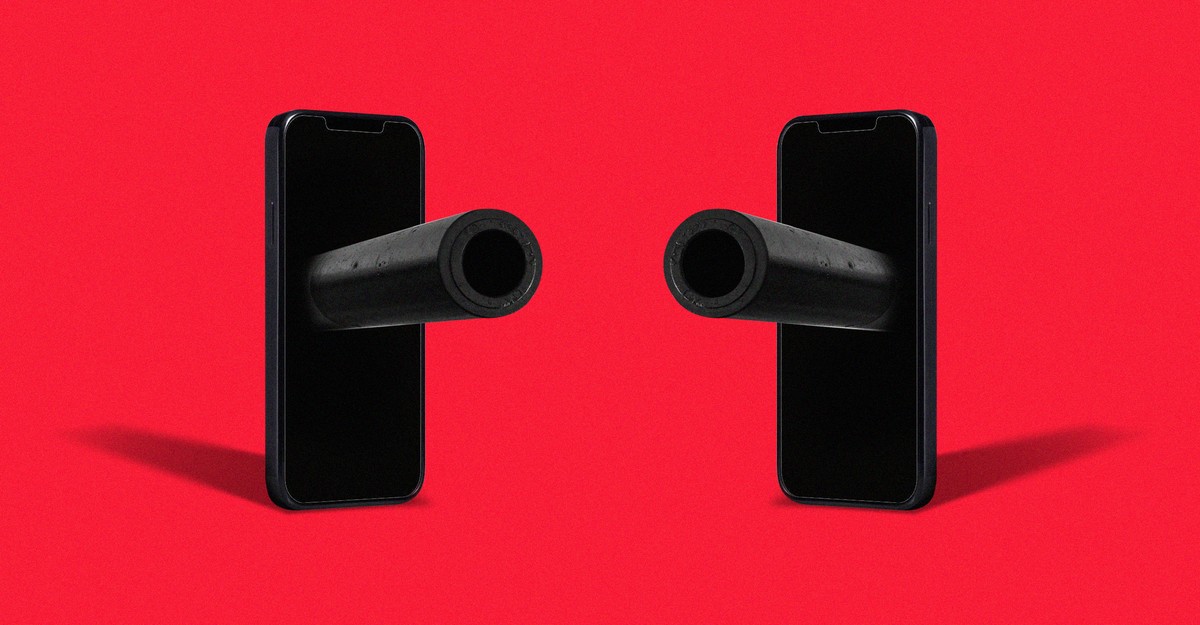

The Information War Between Ukraine and Russia Is Far From Over


Russia’s invasion of Ukraine is not yet two weeks old, but a dozen headlines from major media outlets already suggest that Ukraine is “winning the information war” across much of the world (Russia and China may be notable exceptions). A primary reason for Ukraine’s success, they argue, is Ukrainian President Volodymyr Zelensky, a former actor whose leadership style, media savvy, and earnest, emotional appeals have helped channel sympathy and aid for his country. He is, as one headline put it, “a respected war hero and a cultural icon.”

Zelensky’s performance of wartime leadership has been remarkable for the way it has made use of a new media environment and shaped public opinion. Still, we should be wary of declaring winners and losers of the information war so soon. Like the war on the ground, its scale is dizzying and dynamic: Momentary success in online conflict is not necessarily indicative of future success. I’ve been struck by how the conflict’s digital footprint has both confirmed and upended some long-held understandings and expectations of information warfare. Over the past half decade, activists, journalists, and academics around the world have studied and documented how information flows across the internet and how it is manipulated. Over the past two weeks, some have learned that their conclusions are of less use in wartime.

The first surprise is that, despite a lot of noise, a strong signal has been coming from the front lines. On an internet where propaganda and conspiracy theorizing flourish, you’d expect a land war involving Russia to result in a bewildering barrage of online garbage and falsehoods that render the current state of the conflict truly unknowable. But documentation of the actual military action has emerged with clarity throughout the West. We’ve seen plenty of examples of misinformation, disinformation, and media manipulation: Opportunists are attempting to pass off historical clips, or even video gameplay, as fresh war footage, for example. Yet digital fakes are also being debunked in real time, while real footage is verified by a cohort of trained investigators from newsrooms and volunteer organizations. Across apps such as Telegram, there are channels with thousands of people—an “army of hackers,” as a Forbes article calls them—attempting to report and take down information from Russian state media and coordinate cyberattacks against Russia.

“There’ve been moments at this early juncture where it’s felt like the first time the information war was won by the disinformation fighters,” Eliot Higgins, one of the founders of the Bellingcat project, told me this week. Higgins, who got his start covering global conflicts online in 2012 during the Syrian war, helped pioneer the field of open-source investigation, which uses public footage and imagery posted on social media to find and organize evidence of fighting and atrocities. Higgins and Bellingcat have focused much of their attention on Russia and Ukraine since 2014, when they began looking into the downing of Malaysia Airlines Flight 17 in Donetsk Oblast. “There is a preexisting network in this region that stretches back to 2014 and that has not gone away,” he said. “Journalists are following this network; policy makers are following it. And so you have videos rapidly geolocated and preserved for war-crimes evidence, and it makes it extremely hard for false Russian narratives to take hold.” Higgins believes that Bellingcat’s work has influenced others too. Many newsrooms now incorporate open-source investigation into their war reporting. (The New York Times’ visual-investigations team is one notable example.)

Jane Lytvynenko, a senior research fellow at the Harvard Kennedy School’s Shorenstein Center on Media, Politics and Public Policy, argues that online audiences, especially those who monitor breaking news, are a bit more skeptical than they used to be, and better able to spot influence attempts. She told me that open-source investigators like those working at and alongside Bellingcat “have made Russia’s motivations much clearer” than Vladimir Putin would like. “Not only can we untangle their false-flag operations and disinformation, but we can show how poorly executed these propaganda attempts really are,” she said.

That’s the second surprise: Russia’s online propaganda and influence apparatus is not nearly as sophisticated or effective with non-Russian audiences as many thought (at least to this point). Russia’s early attempts to falsely portray Ukraine as a nation of neo-Nazis have been lazy, recycling old material, Higgins told me. “There’s been so much talk of how amazing Russia is at disinformation. But we see it’s not that they were good, but that the rest of us weren’t prepared for it. We weren’t good at verifying or debunking, and we confused our own incompetence for Russian genius.” Still, some Kremlin fake-news campaigns have been successful, and it’s certainly possible that Russia’s propaganda apparatus was merely caught flat-footed by the invasion, and hasn’t yet revved up.

Tldr; Western analysts, please stop declaring that #Ukraine is winning the narrative war. That battle is just getting started.

— Elise Thomas (@elisethoma5) March 3, 2022

And then there’s the opposite side to all of this: the information war Russia is waging on its own citizens—both the information it broadcasts and the outside information it blocks from its public. Of all the elements of this crisis, this may be the most difficult for outsiders to evaluate effectively. It is also a reminder to be humble in our predictions—what might appear to our eyes as ineffective propaganda may read differently to audiences at home. Despite the remarkable footage of Russians protesting the war, reporting also suggests that Kremlin messaging has deceived some Russians into denying the very existence of the conflict.

We may be seeing just how hard it is to wage a successful information war when you are very clearly the villain. Russia’s invasion and the harrowing images of destruction of Ukrainian cities have created a global crisis and a moral consensus that is unusual in the internet era. Lytvynenko said that Ukrainians have also excelled at telling the world what’s happening to them. Their message of resilience, she argued, has been amplified by a large Ukrainian diaspora across the web. “Right now, during a shooting war, you would expect to see Russia attempting to create chaos and confusion and to demoralize the people in the war zone,” Renée DiResta, a computational-propaganda expert, told me this week. “And there is both a leader who is very adeptly keeping up morale and maintaining trust, and a cadre of volunteers working against that.”

Universally accepted narratives can be fleeting, though, especially when media scrutiny fades. The world is tuning in to the hourly news from Ukraine right now, says Mike Caulfield, a researcher at the University of Washington’s Center for an Informed Public, but attention is always fickle. “I do worry, when the attention isn’t so intense, there could be more attempts to muddle the narrative,” he told me. Caulfield pointed to the events of January 6, and argued that the constant airing of footage initially led to a widespread condemnation of the insurgency, but that, with some distance from that intense coverage, many participants and Republicans tried to rewrite the story.

Ukraine, of course, has its own propaganda aims. Official government accounts have helped amplify stories of questionable veracity, such as a debunked anecdote about the reported deaths of the soldiers stationed at Snake Island. Some of these stories have been amplified uncritically by those affected by the scenes coming from Ukraine, leading to what The New York Times has called a “blend” of fact and mythmaking. One Russia and Ukraine expert suggested that large chunks of the information we are seeing across our feeds at any given moment are “unverified or just flat out false.” Errors in our understanding of what is happening on the ground may, at times, be less a product of malice or incompetence than of the size of the conflict and the magnitude of our biases. For example, a recent video allegedly showing a Ukrainian man smoking a cigarette and calmly carrying an unexploded land mine to safety quickly made the rounds on Twitter. While some observers delighted in the man’s bravery and nonchalance, others saw evidence of impending doom.

Obsessing over winners in an information war can be fraught, even dangerous. Peter Pomerantsev, a senior fellow at Johns Hopkins University’s SNF Agora Institute and a scholar of Russian propaganda, argued in 2019 that the information-war lens risks “reinforcing a world view the Kremlin wants—that all information is just manipulation.” On Twitter, Pomerantsev speculated that too much focus on information warfare could flatten what he sees as a crucial difference between Russian and Ukrainian approaches to information. “Collaborative communication is when you engage people, treat them as equals,” he wrote. “Sure the Ukrainian army do all sort of psy-ops to survive. But Ze[lensky] is treating people as equals, trying to engage and inspire them—that’s not ‘information war’. It’s the opposite.”

Brandon Silverman, the creator of the digital-transparency tool CrowdTangle, has been studying and tracking the way that information moves around the internet’s biggest platforms. He’s learned to be extremely cautious in making any broad judgments about what he’s seeing online, he told me. When I asked for his early observations on the conflict’s digital side, he demurred, saying he lacked the hindsight that a more forensic examination would provide. “I’m not going to feel like I have an epistemically confident understanding of what has happened for probably about six to nine months,” he said.

Silverman is focused on the nuances of the information war from the platform side, where social networks have begun to shut out Russian state-media channels such as RT. He’s less concerned about the content and far more worried about process and transparency. Social-media platforms have been taking down and fully deleting Russian state media channels, he warned, instead of archiving them to study later. “If you are looking back a year from now, researchers and historians and those investigating war crimes will want to know, what were these channels doing leading up to this invasion?” he said. “How can we learn how effective a piece of propaganda was if we can’t go back and study it?”

Speaking with these experts convinced me that we may well have more real-time access to accurate information about conditions on the ground than we have had for any other conflict, but that assessing the status of an information war involves much more than identifying the aggressors and the victims. We should not adopt a dangerous post-truth mindset, but we must remember that what is verifiable is likely still surpassed by what we don’t yet know or cannot see. In the middle of a so-called information war, as in the middle of a ground war, it is easy to make confident judgments too early and be seduced by simple narratives. What seems to make sense now may not make sense tomorrow. And the footage we see, no matter how indelible, could be interpreted quite differently through another’s eyes.