Science has finally cracked the mystery of why so many people believe in conspiracy theories

When it comes to the spread of cockamamie conspiracy theories, Twitter was a maximum viable product long before Elon Musk paid $44 billion for the keys. But as soon as he took the wheel, Musk removed many of the guardrails Twitter had put in place to keep the craziness in check. Anti-vaxxers used an athlete’s collapse during a game to revive claims that COVID-19 vaccines kill people. (They don’t.) Freelance journalists spun long threads purporting to show that Twitter secretly supported Democrats in 2020. (It didn’t.) Musk himself insinuated that the attack on Nancy Pelosi’s husband was carried out by a jealous boyfriend. (Nope.) Like a red thread connecting clippings on Twitter’s giant whiteboard, conspiratorial ideation spread far and wide.

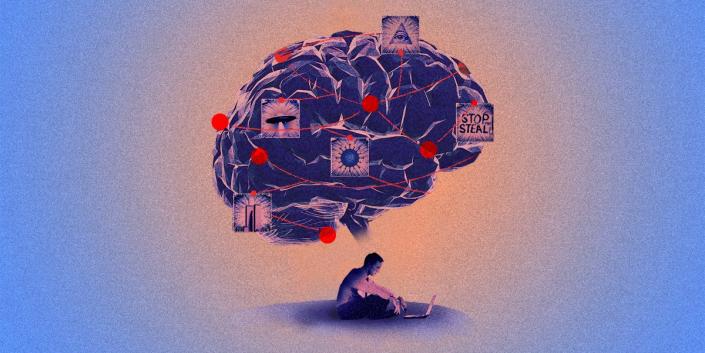

By some measures more than half of Americans believe at least one tale of a secret cabal influencing events. Some are more plausible than others; a few are even true. But most — from classics like the faked moon landing to new-school stuff like 5G cell towers causing COVID — defy science and logic. And while social-media platforms like Twitter and Meta may help deranged conspiracy theories metastasize, a fundamental question remains: Why does anyone fall for stuff like that?

Social scientists are closing in on some answers. The personality traits known as the “Dark Triad” (narcissism, psychopathy, and Machiavellianism, a manipulative, ends-justify-the-means outlook) play a part. So do political beliefs, particularly populism and a tolerance for political violence. Cognitive biases, like believing only evidence that confirms what you already think, also make people more vulnerable.

But according to new research, it isn’t ignorance that makes people most likely to buy into conspiratorial thinking, or social isolation or mental illness. It’s a far more prevalent and pesky personality quirk: overconfidence.

The more you think you’re right all the time, a new study suggests, the more likely you are to buy conspiracy theories, regardless of the evidence. That’d be bad enough if it applied only to that one know-it-all cousin you see every Thanksgiving. But given how both politics and business reward a faith in one’s own genius, the news is way worse. Some of the same people this hypothesis predicts will be most prone to conspiracy thinking also have the biggest megaphones — like an ex-president who believes he’s never wrong, and a CEO who thinks that building expensive cars makes him some sort of visionary. It’d be better, or at least more reassuring, if conspiracy theories were fueled by dumb yahoos rather than self-centered monsters. Because arrogance, as history has repeatedly demonstrated, is a lot harder to stamp out than stupidity.

Have faith in yourself (but not too much)

A decade or so ago, when Gordon Pennycook was in graduate school and wanted to study conspiracist thinking, a small but powerful group of unelected people got together to stop him. It wasn’t a conspiracy as such. It was just that back then, the people who approved studies and awarded grants didn’t think that “epistemically suspect beliefs” — things science can easily disprove, like astrology or paranormal abilities — were deserving of serious scholarship. “It was always a kind of fringe thing,” Pennycook says. He ended up looking into misinformation instead.

Still, the warning signs that conspiracy theories were a serious threat to the body politic go way back. A lot of present-day antisemitism can be traced back to a 19th-century forgery purporting to describe a secret meeting of a Jewish cabal known as the Elders of Zion (a forgery based in part on yet another antisemitic conspiracy theory from England in the 1100s and re-upped by the industrialist Henry Ford in the 1920s). In 1964, the historian Richard Hofstadter warned against what he called the “paranoid style” of America’s radical right and its use of conspiracy fears to whip up support. Even so, most scientists thought conspiracy theories weren’t worth their time, the province of weirdos connecting JFK’s death to lizard aliens.

Then the weirdos started gaining ground. Bill Clinton, they claimed, murdered Vince Foster. George W. Bush had advance knowledge of the September 11 attacks. Barack Obama wasn’t born in the United States. Belief in baseless theories could lead to actual violence — burning cellphone towers because of that COVID thing, or attacking the Capitol because Hugo Chávez rigged the US election. By the time of the January 6 insurrection, Pennycook had already switched to studying conspiracy.

It still isn’t entirely clear whether more people believe conspiracy theories today. Maybe there are just more theories to believe. But researchers pretty much agree that crackpot ideas are playing a far more significant role in politics and culture, and they have a flurry of hypotheses about what’s going on. People who believe in conspiracies tend to be more dogmatic and less able to handle disagreement. They also rate higher on those Dark Triad personality traits. They’re not stark raving mad, just a tick more antisocial.

But at this point, there are just way too many believers in cuckoo theories running around for the explanation to be ignorance or mental illness. “Throughout most of the 1970s, 80% — that’s eight zero — believed Kennedy was killed by a conspiracy,” says Joseph Uscinski, a political scientist at the University of Miami. “Would we say all of those people were stupid or had a serious psychological problem? Of course not.”

Which brings us to the overconfidence thing. Pennycook and his collaborators had been looking at the ways intuition could lead people astray. They hypothesized that conspiratorial thinkers overindex for their own intuitive leaps — that they are, to put it bluntly, lazy. Most don’t bother to “do their own research,” and those who do believe only things that confirm their original conclusions.

“Open-minded thinking isn’t just engaging in effortful thought,” Pennycook observes. “It’s doing so to evaluate evidence that’s directed toward what’s true or false — to actually question your intuitions.” Pennycook wanted to know why someone wouldn’t do that. Maybe it was simple overconfidence in their own judgment.

Sometimes, of course, people are justified in their confidence; after four decades in journalism, for example, I’m right to be confident in my ability to type fast. But then there’s what’s known as “dispositional” overconfidence — a person’s sense that they are just practically perfect in every way. How could Pennycook’s team tell the difference?

Their solution was pretty slick. They showed more than 1,000 people a set of six images blurred beyond recognition and then asked the subjects what the pictures were. Baseball player? Chimpanzee? Click the box. The researchers basically forced the subjects to guess. Then they asked them to self-assess how well they did on the test. People who thought they nailed it were the dispositional ones. “Sometimes you’re right to be confident,” Pennycook says. “In this case, there was no reason for people to be confident.”

Sure enough, Pennycook found that overconfidence correlated significantly with belief in conspiracy theories. “This is something that’s kind of fundamental,” he says. “If you have an actual, underlying, generalized overconfidence, that will impact the way you evaluate things in the world.”

The results aren’t peer-reviewed yet; the paper is still a preprint. But they sure feel true (confirmation bias aside). From your blowhard cousin to Marjorie Taylor Greene, it seems as if every conspiracist shares one common trait: a supreme smugness in their own infallibility. That’s how it sounds every time Donald Trump opens his mouth. And inside accounts of Elon Musk’s management at Twitter suggest he may also be suffering from similar delusions.

“That’s often what happens with these really wealthy, powerful people who sort of fail upwards,” says Joe Vitriol, a psychologist at Lehigh University who has studied the way people overestimate their own expertise. “Musk is not operating in an environment in which he’s accountable for the mistakes he makes, or in which others criticize the things he says or does.”

An epidemic of overconfidence

Pennycook isn’t the first researcher to propose a link between self-regard and epistemically suspect beliefs. Anyone who has attended a corporate meeting has experienced the Dunning-Kruger effect — the way those who know the least tend to assume they know the most. And studies by Vitriol and others have found a correlation between conspiracy thinking and the illusion of explanatory depth — when people who possess only a superficial understanding of how something works overestimate their knowledge of the details.

But what makes Pennycook’s finding significant is the way it covers all the different flavors of conspiracists. Maybe some people think their nominal expertise in one domain extends to expertise about everything. Maybe others actually believe the conspiracy theories they spread, or simply can’t be bothered to check them out. Maybe they define “truth” legalistically, as anything people can be convinced of, instead of something objectively veridical. Regardless, they trust their intuition, even though they shouldn’t. Overconfidence could explain it all.

Pennycook’s findings also suggest an explanation for why conspiracy theories have become so widely accepted. Supremely overconfident people are often the ones who get handed piles of money and a microphone. That doesn’t just afford them the means to spread their baseless notions about Democrats running an international child sex-trafficking ring out of the basement of a pizza parlor, or Sandy Hook being a hoax. It also connects them to an audience that shares and admires their overweening arrogance. To many Americans, Pennycook suggests, the overconfidence of a Musk or a Trump isn’t a bug. It’s a feature.

It’s not necessarily unreasonable to believe in dangerous conspiracies. The US government really did withhold medical treatment from Black men in the Tuskegee syphilis study. Richard Nixon really did cover up an attempted burglary at the Watergate complex. Jeffrey Epstein really did force girls to have sex with his powerful friends. Transnational oil companies really did hide how much they knew about climate change.

So distinguishing between plausible and implausible conspiracies isn’t easy. And we might be more likely to fall for the implausible ones if they’re being spouted by people we trust. “The same thing is true for you,” Pennycook tells me. “If you hear a scientist or a fellow journalist at a respected outlet, you say, ‘This is someone I can trust.’ And the reason you trust them is that they’re at a respected outlet. But the problem is, people are not that discerning. Whether the person says something they agree with becomes the reason they trust them. Then, when the person says something they’re not sure about, they tend to trust that, too.”

The next step, of course, is to figure out how to fight the spread of conspiratorial nonsense. Pennycook is trying. He spent last year working at Google to curb misinformation, and his frequent collaborator David Rand has worked with Facebook. They had some meetings with TikTok, too. That pop-up asking whether you want to read the article before sharing it? That was them.

And what about the bird site? “Twitter? Well, that’s another thing altogether,” Pennycook says. He and Rand worked on the crowdsourced fact-checking feature called Community Notes. But now? “It’s all in flux, thanks to Elon Musk.”

But Pennycook’s new study suggests that the problem of conspiracy theories runs far deeper — and may prove far more difficult to solve — than simply tweaking a social-media algorithm or two. “How are you going to fix overconfidence? The people who are overconfident don’t think there’s a problem to be fixed,” he says. “I haven’t come up with a solution for that yet.”

Adam Rogers is a senior correspondent at Insider.

Read the original article on Business Insider