Yet more evidence that we physicians need to clean up our act

A couple of years ago, I wrote about how so many of my colleagues seemed shocked at the emergence of so many antivax physicians, even asking: Why is anyone surprised that there are so many antivax physicians? At the risk of coming across as saying, “I told you so,” I basically told you so then, noting that those of us who have been writing about the antivaccine movement knew that none of this was new, that, contrary to what so many of my colleagues (and, truth be told, most pundits) appeared to think, prior to the pandemic far too many physicians held antivax views, a number that has only increased since 2020. Basically, the prevalence of antivax physicians only seemed new to my colleagues because they hadn’t been paying attention before the pandemic and had thus been able to falsely reassure themselves that the antivax quack problem in our profession was just Andrew Wakefield and maybe a handful of cranks and quacks. Of course, my response to that dismissal was always to ask why state medical boards had long been so toothless—and they have long been toothless—with respect to policing our profession and disciplining antivax quacks—indeed disciplining all quacks, which state medical boards have long failed to do (e.g., see the case of Dr. Stanislaw Burzynski, who has been preying on desperate cancer patients since the late 1970s, mostly unmolested). COVID-19 just laid bare how toothless state medical boards have long been with respect to protecting the public from quacks, antivax or otherwise.

“You really think it’s all new.

“You really think about it too,”

The old man scoffed as he spoke to me,

“I’ll tell you a thing or two.”

The Clash, Something About England (1981)

Last week, a study further reinforcing just how badly physicians as a profession have failed our patients and society was published in PNAS Nexus, Perceived experts are prevalent and influential within an antivaccine community on Twitter. Ironically, the study’s lead author, Mallory J. Harris, is affiliated with the Department of Biology at Stanford. Why “ironic”? Simple. As we have documented here time and time again, since the pandemic, Stanford University has become one of the foremost institutions harboring prominent academics who have minimized the seriousness and threat of COVID-19, advocated for “let ‘er rip” approaches to the pandemic in a futile effort to achieve “natural herd immunity” (Great Barrington Declaration, anyone?), and even promoted outright antivaccine misinformation. Whether it’s formerly admired academic (by me, anyway) John Ioannidis grossly underestimating the infection fatality rate (IFR) of SARS-CoV-2, accusing doctors of “just going crazy and intubating patients who did not have to be intubated” (and refusing to admit his mistake), and later being reduced to treating a satirical publication index as though it were real in order to attack his critics or Dr. Jay Bhattacharya championing “natural herd immunity” as the solution to the pandemic, Stanford has not exactly covered itself with glory over the last four years. Dr. Bhattacharya, in particular, has gone full quack. He now has a podcast, The Illusion of Consensus, in which he gives credulous interviews to antivaxxers and COVID-19 quacks. He has even featured “debates” on his website between antivaxxers over whether COVID-19 vaccines have killed 17 million, as well as interviews with Kevin McKernan (a key promoter of the “DNA contamination” of COVID-19 vaccines narrative) and Jessica Rose, known for dumpster-diving into the VAERS database to dredge up all sorts of false “injuries” and deaths due to COVID-19 vaccines.

So it’s great to see something fruitful coming out of Stanford, specifically an attempt to quantify how prevalent antivax physicians are on one social media platform (X, the platform formerly known as Twitter), and to see that the Stanford Center for Innovation in Global Health is still fighting the good fight.

Let’s see what Harris and coinvestigators found.

Physician experts on both sides, but with a big difference

From my perspective, what I welcomed about this study is that it tried to quantify a phenomenon that I had long been aware of going back to the dawn of social media, specifically the prevalence of antivax “experts,” legitimate physicians and scientists who, for whatever reason, had gone down the antivax conspiracy rabbit hole and as a result started spending a lot of time online promoting antivax misinformation. (Before social media, it was blogs and even Usenet, as the case of “quack tycoon” Dr. Joe Mercola demonstrates.) Obviously, among these Andrew Wakefield is the granddaddy of them all, at least in the modern antivax movement, although rabidly antivax physicians like “medical heretic” Dr. Robert S. Mendelsohn predated Wakefield by decades.

In the introduction, Harris et al note:

Information consumers often use markers of credibility to assess different sources (25, 26). Specifically, prestige bias describes a heuristic where one preferentially learns from individuals who present signals associated with higher status (e.g. educational and professional credentials) (27–29). Importantly, prestige-biased learning relies on signifiers of expertise that may or may not be accurate or correspond with actual competence in a given domain (25, 30). Therefore, we will refer to perceived experts to denote individuals whose profiles contain signals that have been shown experimentally to increase the likelihood that an individual is viewed as an expert on COVID-19 vaccines (31), although credentials may be misrepresented or misunderstood (user profiles may be deceptive or ambiguous, audiences may not understand the domain specificity of expertise, and platform design may impair assessments of expertise if partial profile information is displayed alongside posts). We focus on the understudied role of perceived experts as potential antivaccine influencers who accrue influence through prestige bias (4, 13). Medical professionals, biomedical scientists, and organizations are trusted sources of medical information who may be especially effective at persuading people to get vaccinated and correcting misconceptions about disease and vaccines (29, 32–35), suggesting that prestige bias may apply to vaccination decisions, including for COVID-19 vaccines (36, 37).

I note that this study examines both antivax “experts” and legitimate experts who are science-based and thus provaccine. The authors note that no systematic attempt had yet been made before their study to quantify the effect of these antivax “experts” on influencing the public, pointing out:

In addition to signaling expertise in their profiles, perceived experts may behave like biomedical experts by making scientific arguments and sharing scientific links but also propagate misinformation by sharing unreliable sources. Antivaccine films frequently utilize medical imagery and emphasize the scientific authority of perceived experts who appear in the films (42, 43, 48). Although vaccine opponents reject scientific consensus, many still value the brand of science and engage with peer reviewed literature (49). Scientific articles are routinely shared by Twitter users who oppose vaccines and other public health measures (e.g. masks), but sources may be presented in a selective or misleading manner (40, 49–53). At the same time, misinformation claims from sources that often fail fact checks (i.e. low-quality sources) are pervasive within antivaccine communities, where they may exacerbate vaccine hesitancy (6, 18, 54, 55). To understand the types of evidence perceived experts use to support their arguments, we ask (RQ2): How often do perceived experts in the antivaccine community share misinformation and academic sources relative to other users?

As I like to say, antivaxxers, especially antivax scientists and physicians, use the peer-reviewed biomedical literature the same way that an inebriated person uses a lamppost at night, for support rather than for illumination. As we here at SBM have discussed time and time again, they frequently misinterpret and/or cherry pick studies to support their position, often highlighting low quality studies from antivax “investigators” published in dodgy, bottom-feeding or even predatory journals.

Before we get to the actual meat of the study, I can’t help but highlight one more quote from the study about why it matters, why “experts” are so influential:

In general, perceived expertise may increase user influence within the provaccine and antivaccine communities. Although vaccine opponents express distrust in scientific institutions and the medical community writ large, they simultaneously embrace perceived experts who oppose the scientific consensus as heroes and trusted sources (10, 41, 43, 48). Medical misinformation claims attributed to perceived experts were some of the most popular and durable topics within misinformation communities on Twitter during the COVID-19 pandemic (12, 63). In fact, compared to individuals who agree with the scientific consensus, individuals who hold counter-consensus positions may actually be more likely to engage with perceived experts that align with their stances (51, 64, 65). This expectation is based on experimental work on source-message incongruence, which suggests that messages are more persuasive when they come from a surprising source (66–68). This phenomenon may extend to the case where perceived experts depart from the expected position of supporting vaccination. In an experiment where participants were presented with claims from different sources about a fictional vaccine, messages from doctors opposing vaccination were especially influential and were transmitted more effectively than provaccine messages from doctors (69).

Medical misinformation claims from “experts” were indeed some of the most popular and, unfortunately, durable topics within misinformation communities during the pandemic. Indeed, if that weren’t the case, we here at SBM probably would have lost half (or more) of our blogging topics, because the list of physician “experts” who undermined anything resembling the scientific consensus has been large and loud, including, for example, Drs. Peter McCullough, Robert “inventor of mRNA vaccines” Malone, William “Turbo Cancer” Makis, and Ryan Cole, the entire crew of physicians at the Frontline COVID-19 Critical Care (FLCCC) Alliance, America’s Frontline Doctors, the Association of American Physicians and Surgeons (AAPS, the granddaddy of all crank and antivax pseudomedical organizations and John Birch Society of medicine, founded in 1943), and others.

In brief, Harris et al had four main research questions:

- How many perceived experts are there in the antivaccine and provaccine communities?

- How often do perceived experts in the antivaccine community share misinformation and academic sources relative to other users?

- Do perceived experts occupy key network positions (i.e. as central and bridging users)?

- Are perceived experts more influential than other individual users?

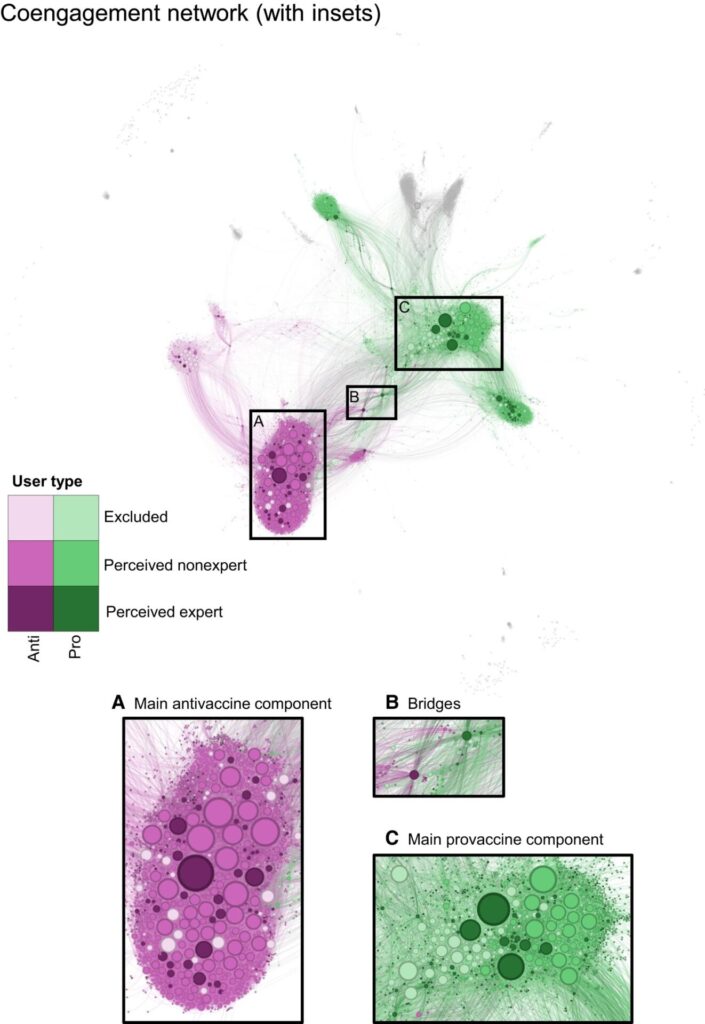

So, how did Harris et al attempt to quantify the influence of antivax physicians and scientists? In brief, they looked at Twitter during specific time period (April 2021) and collected over 4.2 million unique posts to Twitter containing keywords about COVID-19 vaccines. They then constructed a coengagement network where users were linked if they their posts were retweeted at least ten times by at least two of the same users, meaning that they shared an audience. The resulting network looked like this:

The authors then used the Infomap community detection algorithm, which detected a divide in which one group generally posted Tweets with a positive stance supportive of COVID-19 vaccines and the other posted Tweets expressing a negative stance towards the vaccines. The authors, appropriately enough, labeled the first community as provaccine and the second as antivaccine, although the authors did note that the stance “toward COVID-19 vaccines in popular tweets by users with the greatest degree centrality was relatively consistent, meaning that very few users posted a combination of tweets that were positive and negative in stance, although most users posted some neutral tweets as well” and that stances were “generally shared within communities; popular nonneutral tweets by central users in the antivaccine and provaccine communities almost exclusively expressed negative and positive stances, respectively, with a few exceptions.”
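To make the idea of a coengagement network concrete, here is a minimal sketch of the linking rule as described above. It is not the authors' code: the function name, the account names, and the thresholds in the toy data are hypothetical, and it assumes one particular reading of the rule (two accounts are linked when enough of the same users have each retweeted both accounts often enough). The community detection step (Infomap) is not shown.

```python
from collections import defaultdict
from itertools import combinations


def build_coengagement_edges(retweets, min_retweets=10, min_shared=2):
    """Return the set of author pairs that share an audience.

    retweets: iterable of (retweeter, author) pairs, one per retweet.
    Assumed reading of the rule: two authors are linked when at least
    `min_shared` of the same retweeters each retweeted both authors at
    least `min_retweets` times.
    """
    # counts[author][retweeter] = number of times retweeter retweeted author
    counts = defaultdict(lambda: defaultdict(int))
    for retweeter, author in retweets:
        counts[author][retweeter] += 1

    # Keep only the retweeters who engaged with each author often enough.
    audience = {
        author: {r for r, n in by_user.items() if n >= min_retweets}
        for author, by_user in counts.items()
    }

    # Link every pair of authors whose frequent audiences overlap enough.
    edges = set()
    for a, b in combinations(sorted(audience), 2):
        if len(audience[a] & audience[b]) >= min_shared:
            edges.add((a, b))
    return edges


# Toy data with hypothetical account names and lowered thresholds.
toy = (
    [("fan1", "dr_a")] * 2 + [("fan2", "dr_a")] * 2
    + [("fan1", "dr_b")] * 2 + [("fan2", "dr_b")] * 3
    + [("fan1", "dr_c")] * 2 + [("fan3", "dr_c")] * 2
)
edges = build_coengagement_edges(toy, min_retweets=2, min_shared=2)
print(edges)
```

In the toy data, only dr_a and dr_b end up linked, because they are the only pair with two frequent retweeters in common. The point of the design is that an edge reflects a shared audience, not any direct interaction between the two accounts.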

Another interesting finding:

The provaccine community was larger than the antivaccine community (3,443 and 2,704 users, respectively). Perceived experts were present in both communities, but constituted a larger share of individual users in the provaccine community (17.2% or 386 accounts) compared to the antivaccine community (9.8% or 185 accounts) (Fig. 3).

I say “interesting finding” because it often seems as though the antivaccine social media community is much larger than the provaccine community, although the authors’ finding that there were more perceived experts in the provaccine community as a percentage of the total community than in the antivax community does jibe with my “gestalt” over social media. That being said, these antivax doctors tend to be very influential.

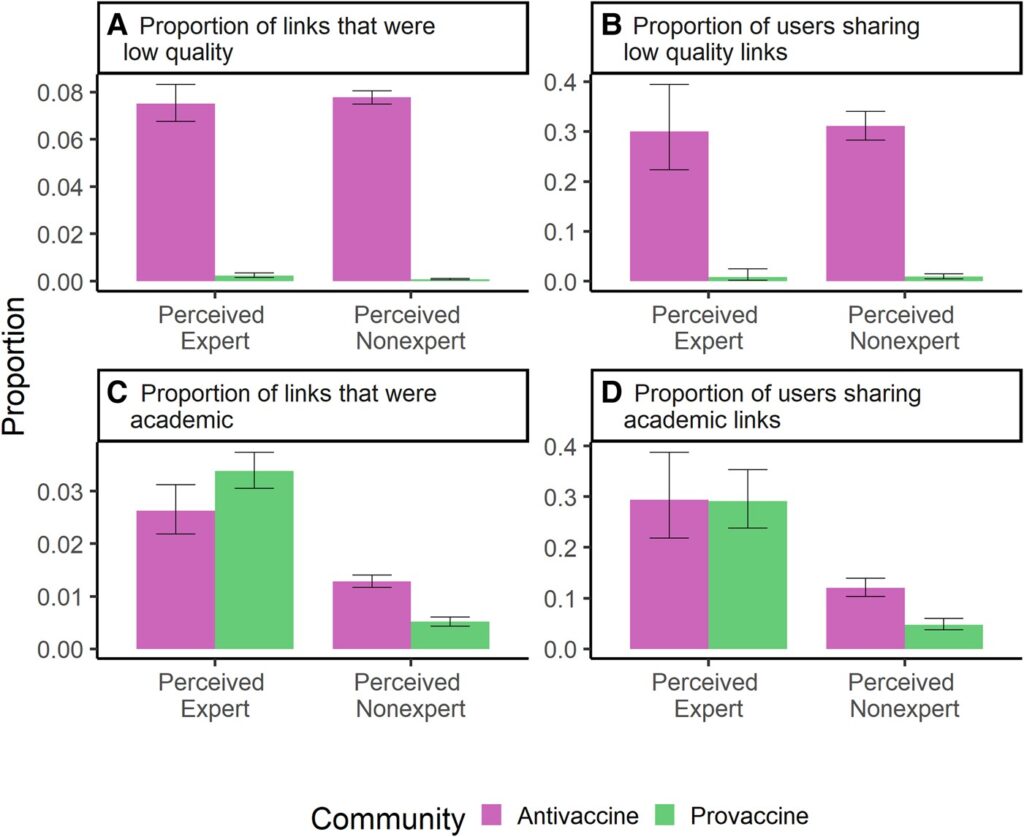

Another finding that jibes with my experience is that perceived experts in the antivax community frequently share articles from poor quality sources compared to what perceived experts in the provaccine community share. Actually, unsurprisingly, both nonexperts and perceived experts in the antivax community share poor quality sources:

Looking at this figure, I was rather surprised at the low percentage of sources that perceived experts shared that were academic links, in both communities. On the other hand, if you’re trying to influence a lay public, you probably don’t want to be sharing a lot of articles that your target audience might not understand. Be that as it may, it turns out that perceived experts tend to be more influential on social media as measured by their positions as bridging and central users. Moreover, a key observation is that perceived experts in the antivax community appear to share poor quality sources (e.g., domain names that repeatedly fail fact checks) at a frequency indistinguishable from the perceived nonexperts in the same group. I will note that the authors didn’t look at how the academic links shared by the perceived experts in each community were used, but will simply reiterate how in general I have often observed antivax physicians cherry picking studies and ignoring contradicting evidence, misinterpreting studies to support conclusions the studies don’t support, and/or amplifying bad science done by disreputable “scientists” or physicians published in questionable journals.

As I like to say, the antivax movement in April 2021 could be characterized as the same as it ever was, only more so than before the pandemic. Also remember that April 2021 was only four months after the first COVID-19 vaccines started to be distributed after the FDA granted them emergency use authorization (EUA) in December 2020.

Perceived experts were found to be overrepresented among users with the greatest betweenness, degree, and PageRank centrality in both communities, with the terms meaning (taken from the Supplement):

- Degree centrality. Number of edges connecting a given node to other nodes, which selects users who share an audience with many other users; serves as a proxy for audience size.

- Betweenness centrality. Number of times a given node appears along the shortest path between two other nodes in the network, which selects users who share an audience with users that otherwise do not have much overlap in their audiences.

- PageRank centrality. Recursively assigns nodes a value based on whether they are connected to other nodes with high PageRank centrality, which selects users who share audiences with many other users whose audiences overlap with those of many others in the coengagement network.

- Community bridging. Takes the minimum of the number of edges that a node has connecting it to nodes in any focal communities, which selects users who share an audience with relatively many users in both the anti- and pro-vaccine communities.
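For readers who want to see how these measures behave, here is a minimal sketch that computes degree centrality, betweenness centrality, and community bridging on a tiny made-up network. It is an illustration under stated assumptions, not the authors' pipeline: the node names and community labels are hypothetical, betweenness is computed with Brandes' algorithm for unweighted graphs and left unnormalized, and PageRank is omitted for brevity.

```python
from collections import deque


def degree_centrality(adj):
    """Number of edges attached to each node."""
    return {v: len(nbrs) for v, nbrs in adj.items()}


def betweenness_centrality(adj):
    """Unnormalized betweenness for an undirected, unweighted graph."""
    score = {v: 0.0 for v in adj}
    for s in adj:
        order = []                      # nodes in order of BFS discovery
        preds = {v: [] for v in adj}    # predecessors on shortest paths
        sigma = {v: 0 for v in adj}     # number of shortest paths from s
        dist = {v: -1 for v in adj}
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate path dependencies from the farthest nodes inward.
        delta = {v: 0.0 for v in adj}
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    # Each unordered pair of endpoints was counted twice.
    return {v: b / 2 for v, b in score.items()}


def community_bridging(adj, community, focal=("anti", "pro")):
    """Minimum, over focal communities, of a node's edges into that community."""
    return {
        v: min(sum(1 for w in nbrs if community[w] == c) for c in focal)
        for v, nbrs in adj.items()
    }


# Hypothetical toy network: x and p1 sit between the two communities.
edges = [("a1", "x"), ("a2", "x"), ("x", "p1"), ("p1", "p2")]
adj = {}
for u, v in edges:
    adj.setdefault(u, []).append(v)
    adj.setdefault(v, []).append(u)
community = {"a1": "anti", "a2": "anti", "x": "anti", "p1": "pro", "p2": "pro"}

deg = degree_centrality(adj)
btw = betweenness_centrality(adj)
bridge = community_bridging(adj, community)
print(deg, btw, bridge, sep="\n")
```

In this toy network, x has the most edges and also lies on the most shortest paths, while only x and p1 have a nonzero bridging score because only they have edges into both communities.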

It turns out that perceived experts were overrepresented among the users with the highest betweenness centrality and community bridging, as well as the highest degree and PageRank centrality. Perceived experts were overrepresented by a factor of around two among the 500 and the 50 most central users in the antivax community.
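As a rough illustration of what “overrepresented by a factor of around two” means, the sketch below divides the share of perceived experts among the top-k most central users by their share in the whole community. The function name and the toy numbers are hypothetical, and this simple ratio is an assumption about how such a factor could be computed, not necessarily the authors' exact statistic.

```python
def overrepresentation(scores, is_expert, k):
    """Expert share among the k highest-scoring users divided by the overall expert share."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    top = ranked[:k]
    top_share = sum(is_expert[u] for u in top) / len(top)
    base_share = sum(is_expert.values()) / len(is_expert)
    return top_share / base_share


# Hypothetical community of ten users with centrality scores 10 down to 1.
scores = {f"u{i}": 10 - i for i in range(10)}
# Two perceived experts overall (20%), both among the five most central users.
is_expert = {u: u in {"u0", "u3"} for u in scores}

factor = overrepresentation(scores, is_expert, k=5)
print(factor)
```

Here experts make up 40% of the top five but only 20% of the community, so the factor is two. A factor of one would mean experts are no more likely than anyone else to be among the most central users.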

Moreover, when it came to engagement:

Perceived experts in the antivaccine community were 1.43 (95% CI 1.02–1.99) times more likely to receive retweets on their median post than would be expected if they were not perceived experts (Fig. 5 and Table S6). There was no significant effect of perceived expertise on median likes, but there was a significant effect of perceived expertise on h-index for likes and retweets (Fig. 5 and Table S6). Perceived expertise did not significantly affect centrality (betweenness, degree, and eigenvector) within the antivaccine community on average (Fig. 5 and Table S6), although we found in the previous section that perceived experts were overrepresented in the tail of the distribution as highly central users compared to the full (unmatched) set of perceived nonexperts (see Section S9 and Fig. S19).
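The “h-index for likes and retweets” mentioned in the quote may be unfamiliar outside of academic publishing. A minimal sketch, assuming it is the direct analog of the citation h-index (the largest h such that a user has h posts with at least h retweets each), with made-up numbers for one hypothetical user:

```python
from statistics import median


def h_index(counts):
    """Largest h such that at least h posts each have at least h engagements."""
    h = 0
    for rank, n in enumerate(sorted(counts, reverse=True), start=1):
        if n >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical retweet counts for one user's posts.
retweets_per_post = [25, 8, 5, 3, 3, 0]

user_h = h_index(retweets_per_post)
user_median = median(retweets_per_post)
print(user_h, user_median)
```

The median describes a typical post, while the h-index rewards users who repeatedly produce posts that get a lot of engagement, which is why the two measures can tell different stories about the same account.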

The authors also compared the size of the influence boost one gets from being a perceived expert in the anti- and provaccine community and found no significant difference, leading them to conclude that perceived experts are roughly similarly important and influential in both communities. What this means is that our profession is simultaneously the most potent tool to counter misinformation as well as to spread it, all because of our status in society, which in this case translates generally to influence on social media.

To me, perhaps the scariest finding discussed is how 28.9% of perceived experts in the two largest communities of the coengagement network examined by Harris et al were part of the antivax community, which is a proportion similar to those reported by other studies of COVID-19 vaccine attitudes expressed by medical professionals on Twitter cited in the discussion (e.g., this study). As the authors note, this suggests that antivax perceived experts are overrepresented on Twitter compared to the proportion of actual experts (i.e., physicians and scientists) who hold antivax views.

Moreover, unlike Harris, I was not surprised that antivax “experts” were sharing scientific articles online. For instance, in an interview, Harris answers a question about which of the study’s findings they found most surprising:

We saw perceived experts in both the pro-vaccine and the anti-vaccine community sharing many more scientific articles than non-experts. So these perceived experts in the anti-vaccine community did not just have credentials in their names — they were behaving in many of the same ways as their counterparts in the pro-vaccine community, such as discussing scientific papers.

That’s exactly what I’ve been seeing antivaccine “experts” do online for well over two decades, starting with Usenet, moving to websites and blogs, and then becoming active on social media.

What to do?

So we have a study that suggests that perceived experts, particularly physicians and scientists, are much more influential about COVID-19 vaccines than the average Twitter user, or, at least this was the case in April 2021. Unfortunately, that was before Elon Musk bought Twitter. I say “unfortunately,” because the functional changes made to Twitter, leaving aside the asinine renaming of the platform to “X,” make a research project like this well nigh impossible to undertake now without great expense. Moreover, given the deterioration of the platform, it wouldn’t surprise me if the situation is much worse now than it was nearly three years ago. Thus, there are a lot of limitations to this study, including that it only looked at individuals discussing COVID-19 vaccines during a given month early during the vaccine rollout, was mainly US-centric and based on English-language Tweets, and used Tweet engagements as an indicator of Tweet popularity (which may lead to an underestimate of the true reach of content). Also, Twitter users are most definitely not broadly representative of the general population. Even so, these findings do suggest some issues with commonly believed strategies to combat medical misinformation:

Educational interventions that encourage trust in science could backfire if individuals defer to antivaccine perceived experts who share low-quality sources (76). Instead, education efforts should focus on teaching the public about the scientific process and how to evaluate source credibility to counter the potentially fallacious heuristic of deferring to individual perceived experts (26, 76–78). Although the sample of perceived experts in this study is not representative of the broader community of experts, surveys have found that a nonnegligible minority of medical students and health professionals are vaccine hesitant and believe false claims about vaccine safety (71, 79, 80). Given that perceived experts are particularly influential in vaccine conversations (Figs. 3–5) and that healthcare providers with more knowledge about vaccines are more willing to recommend vaccination, efforts to educate healthcare professionals and bioscientists on vaccination and to overcome misinformation within this community may help to improve vaccine uptake (81).

(I wrote about one of the studies regarding the prevalence of vaccine hesitancy and antivax views among physicians way back in April 2022, by the way.)

Also, Harris said elsewhere:

We have to go a level deeper and question who these people we’re listening to are. What exactly are they an expert in? Have they published on this subject? Might they have financial or political conflicts of interest? We can also try to understand an individual’s reputation in their scientific community.

All of which are a good start, but not sufficient. If there’s one thing that the pandemic has taught us, it’s that physicians and scientists with stellar reputations can break bad. Again, examples that I routinely cite include John Ioannidis, who, while not being antivaccine, has promoted very bad takes on the pandemic and how to control it through “natural herd immunity”; Dr. Vinay Prasad, who before the pandemic was a respected oncologist but now promotes borderline antivax, if not outright antivax, takes on vaccinating children and boosting adults against COVID-19 to the point of finding some of Robert F. Kennedy, Jr.’s antivax takes compelling; or Dr. Jay Bhattacharya, who has probably by now gone way too far down the antivax quack conspiracy rabbit hole to be salvageable.

I’ve long pointed out that an MD, PhD, or other title doesn’t make one any less human and, as a result, MDs, PhDs, and other experts are just as susceptible to believing pseudoscience and conspiracy theories as nonexpert lay people. As much as we physicians would like to view ourselves as immune (or at least more resistant) to the effects of ideology, emotion, and belief that do not flow from science and reason, we are not. Indeed, it is hubris on our part to think that we are less susceptible to unreason than anyone else, but that doesn’t stop us from thinking that. Worse still, because we as a group tend to think of ourselves as more reasonable and less susceptible to conspiracy theories, misinformation, and disinformation than the average person, when we do fall for them we tend to fall hard, as in very hard. (I’m talking to you, Dr. Peter McCullough and Dr. Pierre Kory, to cite two prominent examples.) On top of that, because we as a group tend to be highly intelligent, we tend to be very, very good indeed at motivated reasoning. Specifically, we are very skilled at asymmetrically seeking out evidence and arguments that support our pre-existing beliefs and finding flaws in evidence and arguments that challenge those beliefs, all while reassuring ourselves that our training as physicians means that our beliefs about medicine are all strictly based on science. That and the public perception of us as experts are two big reasons why we’re so influential on social media when we discuss COVID-19 vaccines. Also, we tend as a group to be overconfident and very good at weaponizing the techniques of scientific analysis in order to “reanalyze” data or “visualize” it in a different and misleading way.

This is a big reason why I emphasize that you need to learn how to tell a likely reliable expert from a quack and try to educate our readers about the warning signs. I grant you that it’s not always easy, as there will always be gray areas. However, most of the time we are not dealing with gray areas, and much of the time distinguishing a legitimate medical expert from an antivax quack can be surprisingly straightforward. There are a number of big “tells,” such as echoing and amplifying obvious conspiracy theories, claiming that a single treatment cures multiple unrelated diseases, portraying an intervention as unrelentingly awful, and the like. Perhaps the single most important “tell” that identifies a physician or scientist as a likely quack is hostility towards a scientific consensus or, often, hostility towards even the concept of scientific consensus, as I have described in detail multiple times.

Think of this as just another part of pre-bunking, the technique of communicating common (and fairly easily predicted) narratives of misinformation in order to “head it off at the pass,” so to speak. Part of pre-bunking is learning to recognize who is and is not a reliable expert source of information about a topic.

We also need to clean up our profession

It’s long been a theme on this blog that medicine as a profession and the governmental bodies that regulate it, such as state medical boards, have failed miserably at the task of policing medicine and protecting patients (and the public) from quacks and misinformation-spreading social media influencers. For nearly the entire 16-year history of this blog we have lamented how state medical boards are underfunded, understaffed, and lack the legal tools (and, in some cases, the will) to truly protect the public from rogue doctors. In particular, they are reluctant to judge when one of our own is a quack or to do anything that appears to be “policing speech,” never mind that antivax and quack speech nearly always goes hand-in-hand with unscientific practices that can be considered quackery. Nor is this a new problem.

I like to cite the case of Dr. Rashid Buttar, whose story began with an attempt by the North Carolina Board of Medical Examiners to discipline him for his use of chelation therapy to treat autism and cancer. During the proceedings, Dr. Buttar famously (to us, anyway) called the Board a “rabid dog.” Ultimately, however, instead of permanently revoking Dr. Buttar’s medical license, the Board gave him a slap on the wrist, telling him that he could no longer treat children or cancer patients. This led Dr. Buttar to become a “pioneer” in what has become an all-too-common and distressing tactic among quacks since COVID-19: Attacking state medical boards and any other medical authority trying to rein in quacks and misinformation. His was the template that COVID-19 disinformation spreading doctors followed. Dr. Buttar and his allies persuaded the state legislature to alter North Carolina state law to make it more quack-friendly by mobilizing public support to neuter the North Carolina Medical Board and prevent it from disciplining doctors who practice quackery associated with “integrative medicine.” Specifically, the 2008 law, passed due to the advocacy of the North Carolina Integrative Medical Society and Dr. Buttar, prevents the medical board from disciplining a physician for using “non-traditional” or “experimental treatments” unless it can prove they are ineffective or more harmful than prevailing treatments. Again, this was the template that became the norm during the pandemic and was used to attack state medical boards that tried to discipline physicians for promoting COVID-19 misinformation.

I realize that the politics have become very toxic, but some of this is a self-inflicted wound, due to the long history of what Dr. Val Jones dubbed (in 2008!) “shruggies,” doctors who recognize quackery among their peers but don’t do anything about it, simply shrugging their shoulders (hence the name). Moreover, if a large majority of doctors favored disciplining our own for spreading antivax misinformation, lies, and conspiracy theories, legislatures would listen. Instead, our profession is too concerned with protecting its financial status and its “freedom of speech,” the latter of which quacks appeal to in order to persuade doctors who might find them reprehensible to stay on the fence, at minimum. Until we as a profession recommit ourselves to science-based medicine, to countering quack narratives, and, most importantly, to no longer tolerating the quacks (antivax or other) among our members, the problem will persist and likely continue to get worse.

This article has been archived for your research. The original version from Science Based Medicine can be found here.