How Meta, TikTok, YouTube and X are moderating election threats

Happy Election Day! Surely everything will go smoothly this time, right? Send news tips to: will.oremus@washpost.com.

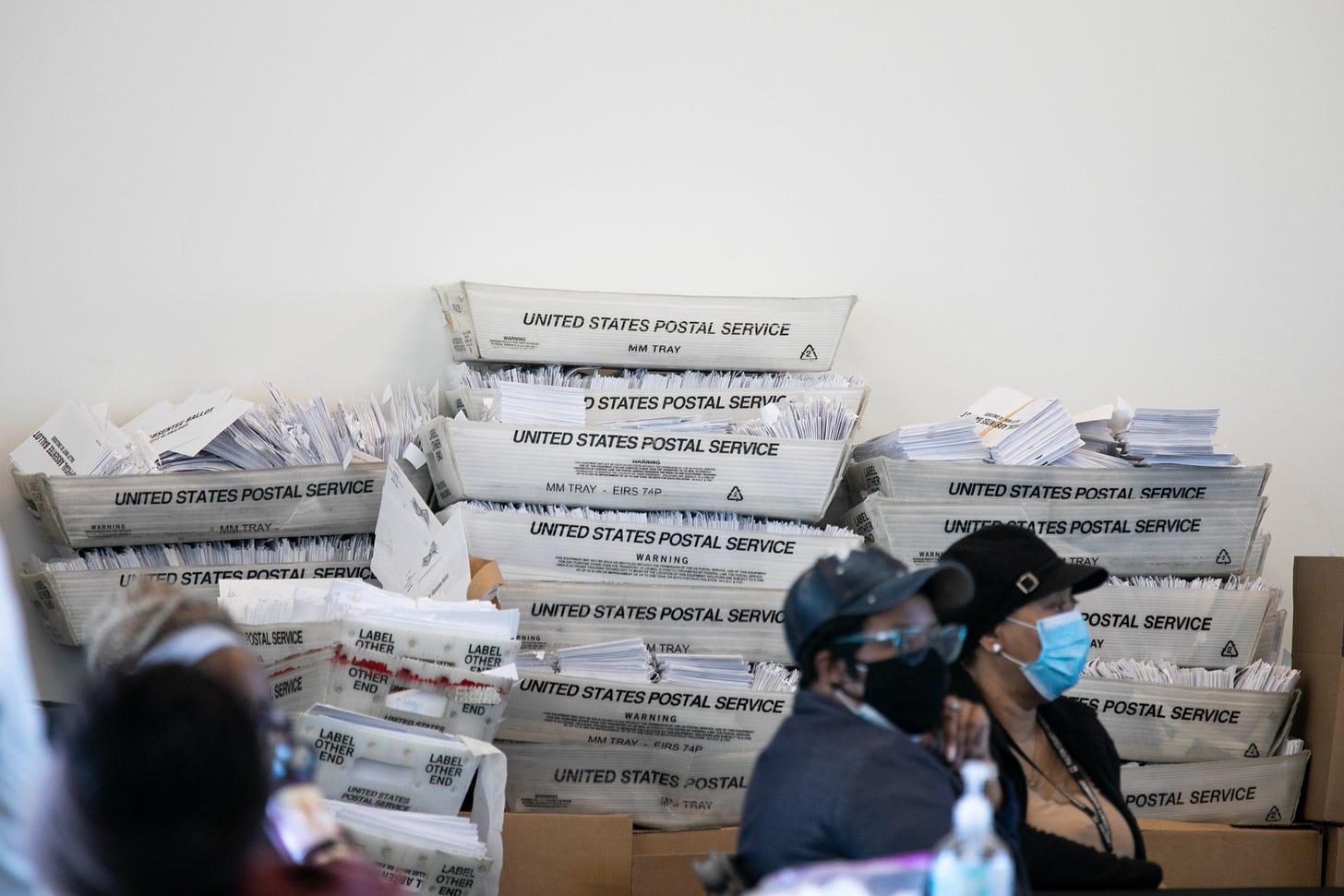

Four years after the major social media platforms took unprecedented steps to crack down on falsehoods in the wake of the 2020 presidential election, their policies have eased and their vigilance has faded, as your guest co-host Naomi Nix and our colleague Cat Zakrzewski reported on Saturday. In the meantime, the “Stop the Steal” movement that began on the far-right fringe has evolved into a coordinated, sophisticated effort, as Nix, Zakrzewski and Drew Harwell reported Monday.

Heading into today’s election, the most influential social networks — including Meta’s Facebook, Instagram and Threads, Google’s YouTube, ByteDance’s TikTok and Elon Musk’s X — have policies and plans in place for moderating election threats and misinformation. Some categories of speech remain prohibited on all of them, including posts that mislead voters about how to vote, who can vote, or the laws and procedures around the election. All four companies also ban posts that seek to intimidate voters, which is against the law.

But no two are taking exactly the same approach, creating a confusing information landscape in which the same claim might be allowed by one platform and restricted by another. To understand the key similarities and differences, Tech Brief asked representatives of Meta, TikTok, YouTube and X to clarify their rules and their plans to enforce them.

Meta

Meta’s approach sticks out in how it handles the “big lie” — the idea that widespread voter fraud cost Donald Trump the 2020 election and threatens a 2024 Trump win. Meta allows political advertisers to claim that the 2020 election was rigged but bans ads questioning the legitimacy of an upcoming election. Users and politicians can make voter fraud claims in their posts, but the company may take action if they cite conspiracies that have been debunked by fact-checkers, include calls for violence or otherwise harass election workers.

The social media giant also shifted its approach to political content across its social networks, de-emphasizing overtly political posts and accounts in its recommendations. And Meta has done less than in previous cycles to promote accurate information about the voting process in its voter information center on Facebook.

Other aspects of Meta’s approach to policing election issues are more mysterious. In 2020, Meta, then called Facebook, said it would add a label to any post from candidates who say they won before the election results are in, instead directing users to results from Reuters and the National Election Pool. Since then, Meta has said it will apply only labels it deems necessary and has declined to elaborate on how it will handle premature declarations of victory.

TikTok

TikTok’s future in the United States is uncertain as it challenges a law requiring it to be sold or banned. Still, it has played a bigger role in the 2024 election than in any previous cycle. It remains the only one of the four platforms that prohibits political advertising (though some slips through anyway). But it has become a battleground for a murkier brand of political influence campaign, with creators taking money from political action committees and dark money groups to promote a candidate to their followers, often without disclosing it.

The company’s policies on civic and election integrity prohibit misinformation about election procedures or electoral outcomes, including claims that Trump won in 2020. As for premature claims of victory, unverified claims of voting irregularities, or other potentially misleading election content, TikTok says they may be ineligible for promotion in users’ feeds until they are reviewed by the company’s independent fact-checking partners. A January report from NYU’s Stern Center for Business and Human Rights found that TikTok’s “tough-sounding policies” were undermined, however, by “haphazard enforcement” that “has failed to slow the spread of deniers’ lies.”

Google/YouTube

YouTube announced last year that it would stop removing videos that claim the 2020 election was rigged — a policy the company first enacted as the Stop the Steal movement gained traction. Though YouTube has reversed course in an effort to preserve political speech, the company says it still removes content that encourages others “to interfere with democratic processes.” That could mean videos that instruct viewers to hack government websites to delay the release of the results, purposely create long lines at a polling location to make it harder to vote or call for the incitement of violence against election officials.

The search giant has some specific plans for Election Day. Google will put a link to its real-time election results tracking feature at the top of search results for campaign-related queries. The results tracker, which relies on the Associated Press for information, will also be added to election videos. After the last polls close on Nov. 5, Google will also pause all ads related to U.S. elections, a blackout the company says could last for a few weeks.

X

Under Musk, X has narrowed the categories of speech that violate its rules, reduced the resources it devotes to enforcing them, and reduced the penalties for breaking them. At the same time, Musk has emerged as a leading backer of Trump and the push to highlight alleged instances of voter fraud.

X told Tech Brief the company will be enforcing its civic integrity policy, which prohibits voter suppression and intimidation, false claims about electoral processes and incitements to real-world violence. X will also enforce its policies on platform manipulation, synthetic media, deceptive account identities and violent content. It will “elevate authoritative voting information” on users’ home timeline and via search prompts, and it will encourage users to add context to one another’s posts using the site’s crowdsourced fact-checking feature, Community Notes.

Still, those policies are the most minimal among the major platforms. Posts that violate the civic integrity rules will be labeled as misleading, but not taken down, and the policy gives no indication that users’ accounts will face consequences. X also does not partner with independent fact-checking organizations to flag or label false claims.

Musk is encouraging posts that call into question the election’s integrity. Last week, he invited his 200 million followers to join a special hub on the site, run by his pro-Trump lobbying group America PAC, devoted to sharing “potential incidents of voter fraud and irregularities,” many of which have turned out to be false or unfounded.

Some experts fear the platforms’ policies will fail to contain an expected flood of falsehoods on Election Day and beyond.

In a guide to platforms’ post-election policies by the nonprofit Tech Policy Press, a group of researchers and analysts noted that there have been some “laudable” changes since 2020, with several platforms now prohibiting threats against election workers. However, they said the weakened prohibitions on misinformation at Facebook, YouTube and X are “worrying,” because they “can serve to delegitimize election outcomes and contribute to violence.”

Katie Harbath, CEO of the tech consultancy Anchor Change and a former Facebook public policy director, has been tracking the platforms’ election plans for months, including those of smaller platforms and messaging apps such as Telegram and Discord. She predicted that no single company will have an outsize impact on the election, because the information environment has become so fragmented.

“I’m most worried about those platforms such as X where Musk has already proven to welcome content that might provoke violence,” Harbath said.

The environment as a whole “seems much worse than it was at the same time in 2020,” added Paul M. Barrett, deputy director of NYU’s Stern Center. “Gasoline is splashing everywhere, and I just fear the matches will be thrown and God help us.”

Government scanner

Pete Buttigieg was once mocked as friendly to corporations. Then he became transportation secretary. (Politico)

Elon Musk’s PAC admits $1 million voter giveaways aren’t ‘random’ (The Verge)

OpenAI in talks with California regulators to become for-profit company (Bloomberg)

Facebook, Nvidia ask Supreme Court to spare them from securities fraud suits (Reuters)

Nuclear power companies hit by U.S. regulator’s rejection of Amazon-Talen deal (Wall Street Journal)

Meta opens Llama AI models to U.S. defense agencies, contractors (Bloomberg)

Privacy Monitor

Columbus says ransomware gang stole personal data of 500,000 Ohio residents (TechCrunch)

Inside the industry

Four years after ‘Stop the Steal,’ an organized army emerges online (Drew Harwell, Cat Zakrzewski, and Naomi Nix)

What’s in your TikTok feed? As elections near, it may depend on gender (Jeremy B. Merrill, Cristiano Lima-Strong and Caitlin Gilbert)

Right-wing groups are organizing on Telegram ahead of Election Day (New York Times)

Workforce report

New York Times tech workers go on strike ahead of election (New York Times)

Perplexity CEO offers to replace striking NYT staff with AI (TechCrunch)

Uber proposed cut to NYC driver base pay on lower gas prices (Bloomberg)

Trending

A deepfake showed MLK Jr. backing Trump. His daughter calls it ‘vile.’ (Pranshu Verma)

Make election week less stressful, without putting down your phone (Heather Kelly)

Daybook

- The Chartered Institute for IT hosts a webinar, “November Policy Jam,” Wednesday at 12 p.m.

- The Internet Society hosts a webinar, “2024 Youth Ambassador Program Symposium,” Thursday at 8 a.m.

- The Hoover Institution hosts an event, “Critical Issues in the US-China Science and Technology Relationship,” Thursday at 4 p.m.

- The Atlantic Council hosts a panel, “Veterans and Cyber Workforce,” Friday at 11 a.m.

Before you log off

That’s all for today. Thank you so much for joining us! Make sure to tell others to subscribe to Tech Brief. Get in touch with Cristiano and Will via email or social media for tips, feedback or greetings!