The dark side of memes: How conspiracy groups hijack humor to build ranks, spread misinformation


BATH, England — Behind every viral meme lurks a potential dark side. While most of us share funny images to make friends laugh, a new study reveals how conspiracy theory communities weaponize memes to spread misinformation and strengthen their collective beliefs. The research from the University of Bath shows how these seemingly harmless viral images become powerful tools for reinforcing dangerous ideologies.

This study, published in Social Media + Society, looked at over 500 memes shared across two COVID-19 conspiracy theory communities on Reddit between 2020 and 2022. Researchers uncovered how these online groups strategically use standardized meme templates and characters to reinforce their beliefs and create a shared identity.

“We see from this study that memes play a significant role in reinforcing the culture of online conspiracy theorist communities,” explains lead researcher Emily Godwin from Bath’s Institute for Digital Security and Behaviour (IDSB), in a statement. “Members gravitate towards memes that validate their ‘conspiracist worldview’, and these memes become an important part of their storytelling. Their simple, shareable format then enables the rapid spread of harmful beliefs.”

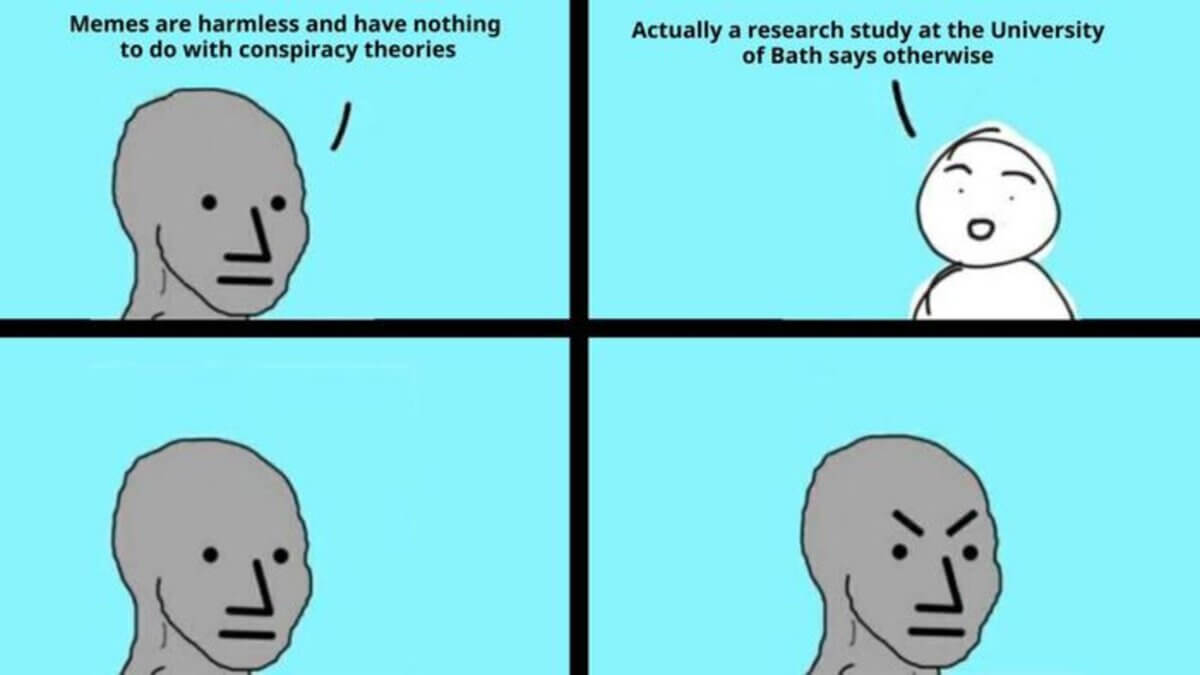

The researchers identified the most frequently used meme formats across these communities. At the top of the list was “NPC Wojak,” a gray, expressionless figure meant to represent someone who lacks independent thought, like a non-player character (NPC) in a video game. The sixth most popular format was “Drakeposting,” which uses stills of rapper Drake from his 2015 “Hotline Bling” music video to show approval or disapproval. “Distracted Boyfriend” ranked tenth, showing a man turning away from one option toward another.

Communities repeatedly used these meme formats to communicate three main themes: deception (portraying authority figures as manipulative), delusion (mocking those who accept mainstream narratives), and superiority (positioning conspiracy believers as enlightened truth-seekers). For instance, one meme showed the NPC character crediting mask-wearing for low flu rates while simultaneously blaming the spread of COVID-19 on people not wearing masks. The meme was an attempt to highlight perceived logical contradictions in mainstream views.

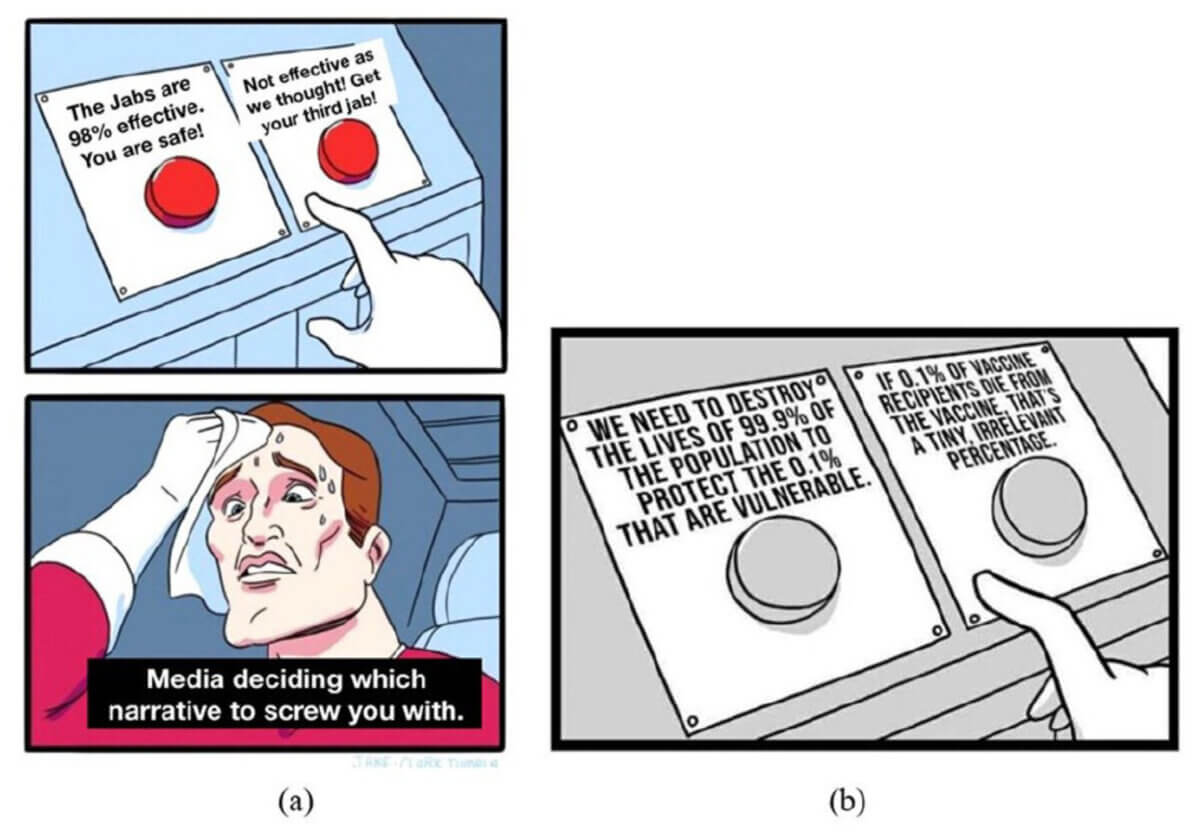

These communities also heavily employed the “Daily Struggle/Two Buttons” meme template, which shows a superhero agonizing over two opposing choices, to mock what they saw as hypocritical messaging from health authorities. For example, one meme presented two contradictory buttons labeled “The jabs are 98% effective. You are safe!” versus “Not as effective as we thought! Get your third jab!” The “Distracted Boyfriend” format was repurposed to criticize how scientific decisions were allegedly influenced by profit motives rather than public welfare.


What makes this study particularly interesting is how these communities used memes more like standardized symbols than creative expressions. Just as a red stop sign means the same thing everywhere, these communities developed their own set of consistent visual signals.

“The humor of memes, typically based on the ridicule and mockery of hypocritical elites and the public, is likely a key driver in attracting new members to these groups, including people who may be unaware of the full context and impact of misinformation,” says co-author Dr. Brit Davidson, Associate Professor of Analytics at IDSB.

The research also revealed how these communities often aligned themselves with right-wing rhetoric, particularly in their criticism of COVID-19 measures. When Russia invaded Ukraine in 2022, some members used memes to suggest this was another orchestrated crisis, demonstrating how their conspiracy mindset extended beyond the pandemic. For instance, one meme showed the NPC character being “programmed” with successive crises, from COVID-19 to Ukraine.

While scrolling through your social media feed, it might be easy to dismiss memes as harmless entertainment. But this research reveals how the same meme formats you use to share jokes with friends can be repurposed to spread misinformation and unite conspiracy communities. Understanding this dual nature of memes could help us become more conscious consumers of online content. What might seem like a silly joke in one context could become a tool for spreading dangerous ideas in another.

Paper Summary

Methodology

The researchers analyzed 544 memes shared across two related conspiracy-focused subreddits about COVID-19. They collected posts from r/NoNewNormal (from June 2020 until its ban in September 2021) and r/CoronavirusCirclejerk (from October 2020 to November 2022). Reddit was specifically chosen as a research site because it’s a widely used discussion platform and known hotspot for conspiracy theories. The research team identified recurring standardized meme templates and characters, then conducted both content and thematic analysis to understand what narratives these memes conveyed and what cultural themes they represented.

Results

Out of 544 memes analyzed, 308 used standardized meme templates or characters, with 200 memes using 49 recurring elements. The most popular format was “NPC Wojak,” followed by “Daily Struggle/Two Buttons” and “Soyjaks vs. Chads,” with “Drakeposting” ranking sixth and “Distracted Boyfriend” tenth. The analysis revealed three main cultural themes: Deception (portraying authorities as manipulative), Delusion (mocking those accepting mainstream narratives), and Superiority (positioning conspiracy believers as enlightened truth-seekers).
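To put those counts in proportion, the short sketch below converts them to percentages. It uses only the three figures reported above (544, 308, and 200) and assumes, which the summary does not state outright, that the 200 memes built on recurring elements are a subset of the 308 that used standardized templates or characters. The helper name `share` is purely illustrative.

```python
def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


TOTAL_MEMES = 544    # all memes analyzed
STANDARDIZED = 308   # memes using standardized templates or characters
RECURRING = 200      # memes using one of the 49 recurring elements

# Share of all memes that relied on a standardized template or character.
print(share(STANDARDIZED, TOTAL_MEMES))  # 56.6

# Share of all memes that relied on a recurring element.
print(share(RECURRING, TOTAL_MEMES))     # 36.8

# Assumption: RECURRING is a subset of STANDARDIZED.
print(share(RECURRING, STANDARDIZED))    # 64.9
```

On these figures, a little over half of the sample drew on a standardized format, and roughly a third drew on one of the recurring elements.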

Limitations

The research focused only on two specific COVID-19 conspiracy subreddits, so findings might not represent all conspiracy communities. Additionally, the data collection from r/CoronavirusCirclejerk was limited to “top” posts, preventing direct comparisons between the two subreddits. The researchers suggest that future studies could examine how other digital elements like emojis, hashtags, online rituals, and community-specific jargon play similar roles in conspiracy communities.

Discussion and Takeaways

The study reveals how memes function as “cultural representations” that help stabilize online conspiracy communities. Rather than being primarily creative or humorous, memes in these spaces serve as standardized cultural resources that reinforce shared beliefs and maintain group cohesion. The research team at Bath’s Institute for Digital Security and Behaviour (IDSB) emphasizes that understanding these dynamics is crucial as digital expression continues to evolve. Their findings suggest that memes play a more complex role in online communities than previously recognized, particularly in how they can facilitate the spread of harmful beliefs while maintaining group unity.

Funding and Disclosures

The research was partially funded by the Engineering and Physical Sciences Research Council (EPSRC) through a PhD studentship to Emily Godwin via the CDT in Cybersecurity at IDSB. The funders had no role in study design, data collection and analysis, decision to publish, or manuscript preparation. The study is part of the broader research agenda of the University of Bath’s Institute for Digital Security and Behaviour, which launched in January 2025.

Publication Information

The study, titled “Internet Memes as Stabilizers of Conspiracy Culture: A Cognitive Anthropological Analysis,” was published in Social Media + Society (January-March 2025). The research team includes Emily Godwin, who is completing her Ph.D. at the University of Bath’s IDSB and serves as a Senior Research Associate at the University of Bristol, along with co-authors Dr. Brittany I. Davidson, Tim Hill, and Adam Joinson from the University of Bath’s School of Management.