Anatomy of a conspiracy theory: how misinformation travels on Facebook

In the early hours of New Year’s Eve, former Liberal MP Craig Kelly logged on to Facebook to make one of his regular contributions to the global network of misinformation about Covid-19 – this time, to promote the antiparasitic drug ivermectin as a treatment for the virus.

The content of the post was not particularly new or remarkable. For months, Kelly had faced increasing criticism from health experts for promoting the use of drugs such as ivermectin and hydroxychloroquine as coronavirus treatments, against scientific evidence.

This one erroneously claimed ivermectin “beats all the experimental vaccines for efficacy in preventing Covid infections” by incorrectly comparing a series of minor studies to vaccine effectiveness data.

Dr Lea Merone, public health consultant and senior research fellow at the University of Queensland, said the problem with Kelly’s post was that it used studies about the drug with “extremely small sample sizes, meaning they used few people to trial this medication”.

“Sample sizes that are too small can lead us to believe we have made false discoveries and often when these studies are replicated on a larger scale with more participants, we don’t get the same results,” she said.

“It is important we don’t recommend treatments based on results from small-sample or pilot studies. The vaccines have been tested rigorously on thousands of people and have been shown to be effective and safe.”

Australia’s chief medical officer, Paul Kelly, has also rebuked the member for Hughes for promoting the drug, saying there was “no evidence” about its usefulness in treating Covid-19.

While the prime minister, Scott Morrison, refused for months to publicly reprimand Kelly for spreading misinformation, Kelly’s popularity on Facebook soared. Using his platform as – until recently – a government MP, he became a beacon for conspiracy theorists and anti-vaxxers.

On Kelly’s 31 December post, the comments were typical: followers claimed Covid-19 was a hoax orchestrated by the World Health Organization and the United Nations to “cull the population”, or a ploy by Bill Gates and pharmaceutical companies to profit from a vaccine.

“Surely most people who follow you now realise that the virus is a part of a greater plot that has nothing to do with our health at all?” one person wrote.

“It’s your constant posting … that has woken me up.”

Kelly strongly denied he had contributed to misinformation about the virus, and pointed to countries such as the Czech Republic and Slovakia, which have approved the use of ivermectin to treat the virus.

That’s despite its own manufacturer, MSD, warning against its use to treat Covid-19.

Kelly told the Guardian he believed the term “misinformation” was being used to “shut down debate” about the use of Covid-19 treatments such as ivermectin.

He also denied he had become a beacon for conspiracy thinking, saying most MPs attracted “crazies” on their posts, and said he was “posting the facts”. He said he was not concerned about comments on his page that suggested some people had come to believe Covid-19 to be a “hoax” because of his social media presence.

“The problem is, and where some of these people get these strange ideas from, is they see when I post things like this and they then see the media claiming Craig Kelly is posting misinformation and they say hang on a minute and they can see that Craig Kelly is posting facts. That is what perhaps incites some of the people to have these bizarre conspiracy theories.”

But the post reached an audience far beyond Kelly’s own following.

This is how a single post from an Australian politician spread to a global network of Facebook groups promoting anti-vaccine, anti-lockdown and coronavirus misinformation. It shows how the platform is uniquely suited to potentially spreading harmful content online.

This is just one post, but researchers from the Queensland University of Technology have looked at how conspiracy theories and misinformation spread on Facebook more broadly.

In a recent paper, Axel Bruns and his team examined the rise of the conspiracy theory that Covid-19 is somehow related to the rollout of the 5G mobile phone network. Craig Kelly has not shared conspiracy theories about 5G.

The QUT team tracked Facebook posts from 1 January to 12 April 2020, and were able to illustrate the “gradual spread of rumours from the fringes to more popular spaces in the Facebook platform” over five distinct phases.

“There were half a dozen other threads of conspiracy that we could have chosen to look at that were emerging at this time, but we ended up choosing 5G, really because of the significant impact it has had in terms of the spate of arson and other attacks linked to it,” Bruns told the Guardian.

“It’s an obvious example of conspiracy theories leading to effects in the real world.”

The data showed not only the spread of 5G misinformation during the pandemic, but also the way it dovetailed with other pre-existing conspiracy theories such as vaccine misinformation. It also highlighted the role of celebrities and some celebrity and sports media in helping those theories reach the mainstream.

The Guardian used the QUT data to show how Facebook posts containing 5G-coronavirus conspiracy content went from reaching only a few thousand people to pages, profiles and groups with hundreds of millions of followers – potentially contributing to a spate of attacks on phone towers in Australia and across the globe.

The chart below uses data from public pages, groups and verified profiles only (which we’ll refer to as pages from here), and while it contains the number of shares, followers and similar metrics, it does not look at how this content is being shared by individual users.

After months of conspiracy content linking Covid-19 to the rollout of 5G, the online activity shifted to the real world. A wave of vandalism and destruction of 5G infrastructure began across the globe. By 30 April, ZDNet counted 61 “suspected arson attacks” in the UK alone, with further attacks in the Netherlands, Belgium, Italy, Cyprus and Sweden.

The vandalism extended to Australia. On 9 May, there was a fire at a phone tower in Morphett Vale, South Australia. Investigators believe it was deliberately lit.

Then, in the early hours of 22 May, a fire broke out at a power and mobile phone tower in Cranbourne West in Melbourne’s outer suburbs. It is still under investigation by Victorian counter-terrorism police.

The attacks did not happen in a vacuum. After months of increasingly widespread misinformation about 5G and Covid-19, by May an anti-lockdown movement had begun to emerge in Melbourne. On 10 May, 10 people were arrested and one police officer taken to hospital after demonstrators gathered in the city’s CBD for a protest advertised as being against “self-isolating, social distancing, tracking apps [and] 5G being installed”.

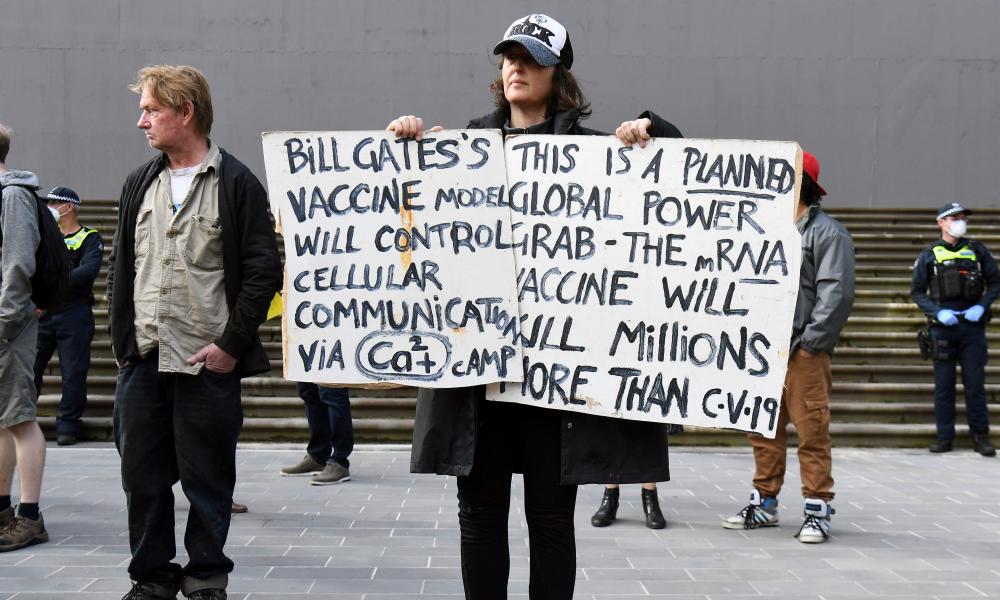

At the protest, many of the signs carried and slogans shouted by demonstrators fit the themes of misinformation found on Facebook by the QUT researchers in the early months of the pandemic. Demonstrators called for Microsoft founder Bill Gates to be arrested, a now-prominent call of conspiracy theorists that began to emerge as misinformation spread in new directions from late February.

In early May, the Australian celebrity chef Pete Evans, well known for his embrace of conspiracy theories during the pandemic, urged his then-231,000 Instagram followers to watch a three-hour interview in which the notorious conspiracy theorist David Icke simultaneously claimed Covid-19 is “a fake pandemic with no virus” and linked infections to 5G.

At the time, Evans wrote: “Here is an alternative view, I would be keen to hear your thoughts on this video as to whether their is any validity in this mans message, especially as there seems to be a lot of conflicting messages coming out of the mainstream these days.”

The QUT paper found celebrities such as Evans played a vital role in pushing misinformation about 5G technology during the pandemic.

“All of these things – 5G, anti-vaccination or things like Covid-19 as a bio-weapon – have all been meshed in together, which in some ways makes them much more powerful because there is something there for everyone,” Bruns said.

The development of this trend, in which – as the QUT researchers describe it – “a growing array of conspiracy bogeymen are variously said to be behind the outbreak”, helps to explain why by 2021 Melbourne’s anti-lockdown movement had evolved into something closer to an anti-vaccination conspiracy group.

While data shows that Australia’s pre-pandemic vaccination rates remain among the highest in the world, there is some evidence of those views seeping into more mainstream audiences. YouGov polling carried out in July and August last year found, for example, that 23% of Australians polled agreed with the statement that the coronavirus was “deliberately created and spread by some powerful forces in the business world”.

In a statement, a spokesperson for Facebook said the posts used by the Guardian to demonstrate the spread of misinformation on the social network “predates our expanded Covid-19 policies”.

“Today, we are running the largest online vaccine information campaign in history and so far have directed over 2 billion people to resources from health authorities through our Covid-19 information centre,” she said.

“We remove conspiracy theories about Covid-19 vaccines, including linking them to 5G hoaxes, have removed 12 million pieces of harmful misinformation about Covid-19 and approved vaccines, and work with 80 fact-checking organisations to debunk other false claims.”

At the time of publishing, all but one of the example posts used by the Guardian remained on Facebook. Only one had been given a “misinformation” filter tag by the company.

* The names marked with an asterisk in screenshots have been changed to avoid promoting accounts that share misinformation.