Facebook and the Group That Planned to Kidnap Gretchen Whitmer

This week, Facebook has been busy disabling itself.

On Tuesday, the company pledged to remove all pages, groups and Instagram accounts identified with the QAnon conspiracy movement — a move that will purge hundreds of groups and potentially many thousands of accounts.

On Wednesday, the company announced it would widen its ban on political advertisements indefinitely, starting after polls close on Election Day. The goal is to stop political candidates and groups from undermining the outcome of the election and stem the inevitable flow of misinformation and disinformation.

Just how effective these bans will be depends on their implementation. Facebook’s record on this is spotty, but so far the takedowns seem comprehensive. Both decisions are steps in the right direction.

Still, taken together, Facebook’s pre-election actions underscore a damning truth: With every bit of friction Facebook introduces to its platform, our information ecosystem becomes a bit less unstable. Flip that logic around and the conclusion is unsettling. Facebook, when it’s working as designed, is a natural accelerating force in the erosion of our shared reality and, with it, our democratic norms.

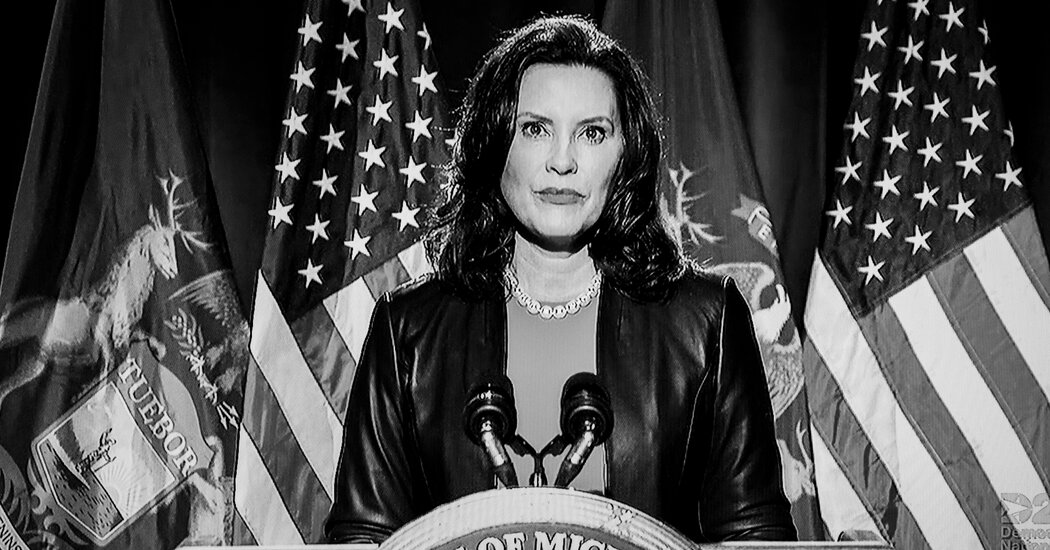

A good example of this appeared on Thursday in a criminal complaint released by the F.B.I. The complaint details a federal investigation that successfully stopped a plot to kidnap the Michigan governor, Gretchen Whitmer, and put her on “trial.” The investigation is the latest example of anti-government domestic terrorism among far-right extremists. The group also discussed plans to attack the Michigan State Capitol building in what the state attorney general, Dana Nessel, called an attempt to “instigate a civil war.”

The complaint mentions Facebook three times as one of the communications platforms that the group used to coordinate its activities. The complaint alleges that, in one private Facebook group, one of the plotters railed against Governor Whitmer’s coronavirus public health restrictions. The plotters also “shared photos and video recordings” of their so-called militia training exercises in Facebook discussion groups, including their failed attempts to make improvised explosive devices. In late July, as the group prepared to carry out its plot against the governor, one of the group’s leaders shared progress reports in the private Facebook group.

Such posts are nothing new for Facebook, where extremists have long gathered and built sprawling private communities away from the watchful eyes of outside moderators and law enforcement. Extremism researchers and anti-extremist advocates have long warned that the groups are hotbeds of radicalization and influential vectors for disinformation and conspiracy theories.

The content generated inside and then amplified by these groups attempts to create political division and chaos. In August, Facebook took heat after reporters uncovered a so-called militia event page where participants discussed an armed response to the demonstrations in Kenosha, Wis. The company claimed it took action and removed the event page. But as BuzzFeed News reported later, it was actually the group that took its own page down after a 17-year-old gunman was charged in the deaths of two people during the protests.

Facebook has taken steps to crack down on so-called militias organizing on the platform, banning over 6,500 pages and groups in recent weeks. But the groups that slip through the cracks seem bent on fomenting political unrest and vigilante violence. Last Sunday, The Guardian reported on a network of far-right Facebook pages run by a father and son promoting extremist content and linking to posts “warning of a stolen election, and suggesting this will lead to civil war.” One of the Facebook pages had nearly 800,000 followers at the time of publication.

This kind of extremism is not unique to Facebook. Violent extremist groups and dangerous conspiracy theorists existed well before the platform. Still, one can’t look at the instability born out of Facebook’s groups and pages and see it as separable from C.E.O. Mark Zuckerberg’s own product design. As I’ve written before, one can plausibly trace the growth of unmoderated extremist groups on Facebook partly to a 2017 speech where Mr. Zuckerberg announced that the company would use an artificial intelligence system to recommend “meaningful communities” to its users.

When Mr. Zuckerberg spoke of meaningful communities, his examples were church groups and veterans’ support communities that made positive impacts on society. He got those, but alongside them the platform’s recommendation engines most likely supercharged the QAnon conspiracy movement. The platform’s quest to “bring the world closer together” also birthed “meaningful communities” for violent anti-government extremists looking to live-action roleplay a civil insurgency. Despite desperate pleas from activists and academics to curtail its recommendation engines, the platform appears committed to doing the exact opposite. Last week, Facebook announced it would begin surfacing some public group discussions in people’s news feeds and search results.

Perhaps most galling is that Facebook, and those of us observing closely, have watched all this happen before outside the United States. Today’s epidemic of hyperpartisanship, disinformation and violent rhetoric leading to real-world instability follows the same pattern seen in countries like the Philippines, Azerbaijan and Myanmar. Instead of learning from those examples, Facebook appears to be running a similar playbook. Knowing what we know, it seems foolish to expect a radically different outcome here at home.

Facebook’s design is not accidental. Near the beginning of his 2017 speech on community, Mr. Zuckerberg revealed part of his motivation to focus on groups and communities. “Every day, I say to myself, I don’t have much time here on Earth, how can I make the greatest positive impact?” he mused.

Normally, that’s a hard question for a company of Facebook’s size. But if Mr. Zuckerberg is still asking himself that question every morning, the answer right now should be crystal clear. Facebook itself seems to know what it ought to do. That’s why it is quietly, temporarily dismantling normally crucial pieces of its infrastructure in anticipation of a crucial moment for American democracy. It’s a tacit admission that what is good for Facebook is, on the whole, destabilizing for society.

How can Mr. Zuckerberg make the greatest positive impact? By introducing friction into a system designed to have as little friction as possible. By reorienting the way Facebook distributes information around values other than engagement and virality. In essence, by stripping out the parts of the platform that we’ve come to know as Facebook until it is unrecognizable. By breaking the platform that’s helping to break us.

*** This article has been archived for your research. The original version from The New York Times can be found here ***