COVID-19 Misinformation on Social Media: A Scoping Review

Social media is defined as “forms of electronic communication (such as websites for social networking and microblogging) through which users create online communities to share information, ideas, personal messages, and other content (e.g., videos)” [1]. Between 2021 and 2022, there were approximately four billion social media users worldwide, with the largest social media networks being Facebook, Instagram, Twitter, YouTube, and TikTok [2]. With the advent of social media, information has become easier to access, leading to the creation of an “infodemic,” or overabundance of information. If used responsibly, these platforms can assist in rapidly supplying the public with newfound information, scientific discoveries, and diagnostic and treatment protocols [3]. Furthermore, they can help compare the various approaches countries around the world are implementing to curb the spread and severity of coronavirus disease (COVID-19) [3]. However, the information disseminated may not be reliable or trustworthy and has the potential to cause undue stress, particularly during public health emergencies [4].

Since COVID-19 was declared a pandemic in March 2020, social media has given health officials, governments, and civilians an open platform to share information publicly, easily, and speedily [5]. In this regard, social media platforms have created a constant and proverbial “tug-of-war” between the efforts of health officials to disseminate evidence-based scientific information to mitigate the effects of the pandemic and the unmonitored spread of misinformation by social media users [5].

Misinformation, colloquially referred to as “fake news,” is defined as false, inaccurate information that is communicated regardless of an intention to deceive [6]. Social media users, some of whom are driven by self-promotion or entertainment, may be less likely to fact-check information before sharing it [7]. Computer algorithms used by social media platforms tend to provide content that is tailored for like-minded individuals, which may reinforce their radical ideology [6]. In turn, this phenomenon may lead to conspiracy theories and a lack of trust in health officials, healthcare workers, and healthcare mandates [8]. Additionally, this excessive exposure to sensationalized social and other media reporting of disasters may be associated with poorer population mental health outcomes [9,10].

As more information circulated regarding COVID-19, many social media users took to their respective platforms to share a myriad of conspiracy theories [11]. These conspiracies can greatly impact an individual’s behavior and undermine the overall efficacy of a government’s implemented regulations regarding COVID-19 [12]. One such theory was related to the concurrence of the COVID-19 pandemic and the release of the 5G wireless network [11]. Users postulated that the 5G network was the culprit behind the rampant spread of COVID-19 [11]. Other conspiracy theories that spread included rumors of ingesting chloroquine, cow urine, or hot water as possible cures [13,14]. There have even been cases involving ibuprofen or other anti-inflammatory drugs because of the erroneous idea that they could increase the chances of getting infected with COVID-19 [15,16]. Ultimately, these conspiracy theories and misinformation can lead to erroneous beliefs and attitudes about the pandemic [13,14]. As a result, preventative measures and recommendations given by public health officials to halt the spread of the severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) virus, including the use of masks and vaccinations, are disregarded [8]. Questioning the effectiveness of masks and the vaccine, along with a disregard for social distancing mandates, may lead to life-threatening attitudes about the virus [13]. Furthermore, this may lead to doubt about the healthcare system as a whole [13].

Public figures, celebrities, and social influencers have also influenced how the public receives and interprets information by popularizing both conspiracy theories and efforts to halt the pandemic, including amplifying current healthcare guidelines and pushing the narrative to “flatten the curve” [17]. To further combat misinformation and discredit myths about COVID-19, the World Health Organization (WHO) has taken to social media to release shareable informational graphics [18,19]. Nevertheless, the dissemination of misinformation on social media about the COVID-19 pandemic may hinder efforts to slow down or stop the spread of the virus [17]. Stopping this pandemic with increased public health measures and curbing social media panic, fueled by misinformation and disinformation, will be of the utmost importance in the fight against COVID-19 [20]. The purpose of this study was to identify misinformation or disinformation spread worldwide through various sources of social media and examine potential strategies for addressing this phenomenon.

A computerized search was performed to identify the sources and impact of COVID-19 misinformation on social media. The databases PubMed, Embase, and Web of Science were utilized with search terms related to the COVID-19 pandemic, social media, and misinformation.

Search strategy

Inclusion and exclusion criteria were established prior to performing the review. Articles were included if they (1) were in the English language, (2) were published in 2020, and (3) included an abstract containing the keywords COVID-19, misinformation, disinformation, and social media. Book reviews, correspondence, editorials, podcasts, radio and television segments, newspaper articles, print media, letters, notes, conference abstracts, short surveys, erratum, conference papers, and book chapters were excluded.

Identification of studies

An electronic search of PubMed, Embase, and Web of Science was performed to identify articles on the spread of COVID-19 misinformation on social media. An initial Boolean and keyword search was conducted that combined three groups of terms: (“COVID 19” OR “COVID-19” OR “coronavirus” OR “SARS-CoV-2”) AND (“misinform*” OR “disinform*” OR “false” OR “rumor” OR “inaccuracy”) AND (“social media” OR “Twitter” OR “Facebook” OR “website” OR “Instagram” OR “Snapchat” OR “TikTok” OR “WhatsApp”).
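The structure of the search string can be illustrated with a short Python sketch. The term lists are taken from the search described above; grouping each concept in parentheses and joining the groups with AND is an assumption about how the operators were meant to nest, and the names `CONCEPTS` and `build_query` are hypothetical. The exact syntax accepted by PubMed, Embase, and Web of Science differs and is not reproduced here.

```python
# Illustrative only: assembles the three concept groups into one Boolean string.
# The parenthesised grouping is an assumption; the source lists the terms
# without stating operator precedence.
CONCEPTS = {
    "disease": ["COVID 19", "COVID-19", "coronavirus", "SARS-CoV-2"],
    "misinformation": ["misinform*", "disinform*", "false", "rumor", "inaccuracy"],
    "platform": [
        "social media", "Twitter", "Facebook", "website",
        "Instagram", "Snapchat", "TikTok", "WhatsApp",
    ],
}


def build_query(concepts):
    """Join terms within a concept by OR and join the concepts by AND."""
    groups = []
    for terms in concepts.values():
        quoted = " OR ".join(f'"{term}"' for term in terms)
        groups.append(f"({quoted})")
    return " AND ".join(groups)


QUERY = build_query(CONCEPTS)
print(QUERY)
```

Keeping each concept in its own list makes it straightforward to add or remove a synonym and regenerate the string for each database.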

Data extraction

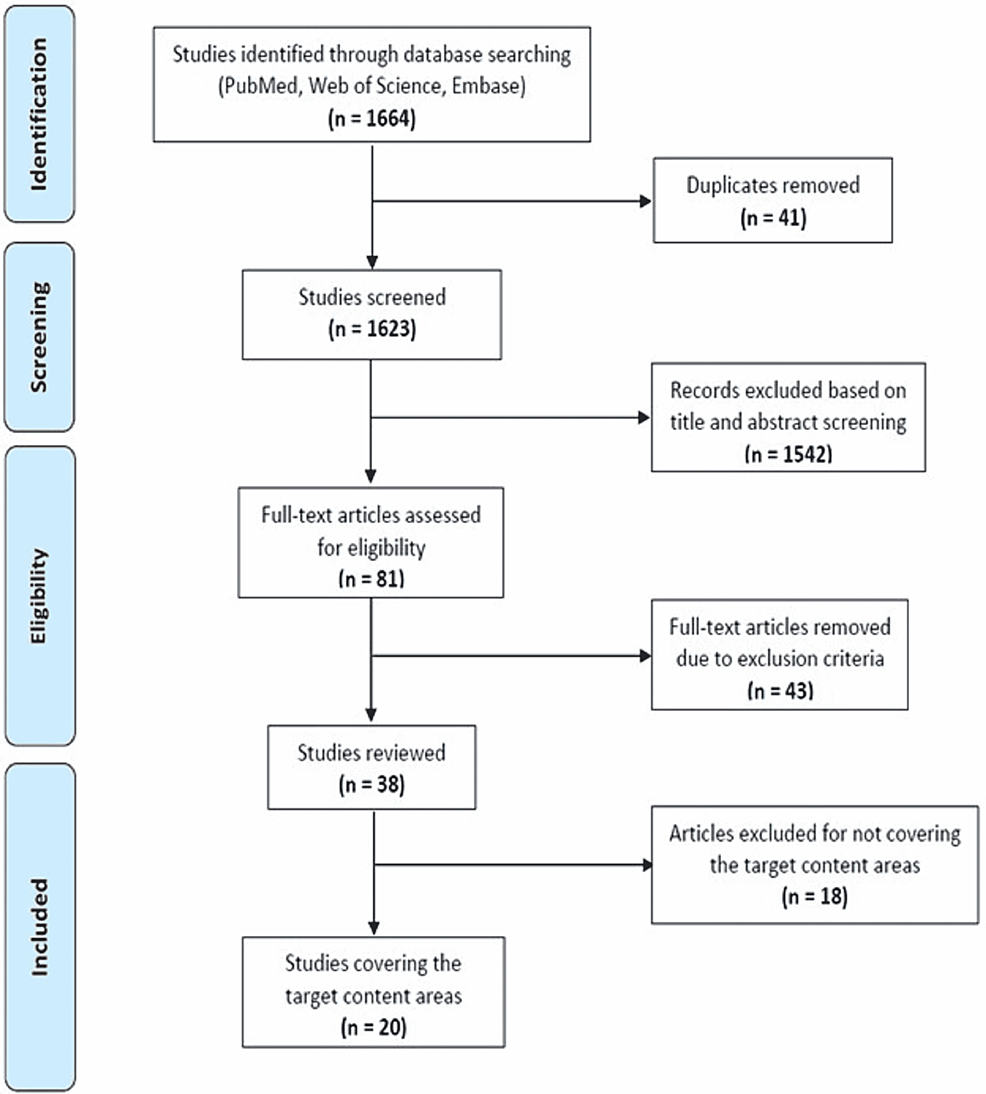

The Boolean and keyword search resulted in 1,664 articles, and 41 duplicates were removed. Of the 1,623 articles that remained, 1,542 articles were removed due to either (1) the abstract not including all keywords (COVID-19, misinformation, disinformation, and social media) or (2) being a book review, correspondence, editorial, podcast, radio, television, newspaper, print media, letter, note, conference abstract, short survey, erratum, conference paper, or chapter. Additionally, any article not written in English or not published in 2020 was removed. The remaining 81 full-text articles were assessed by two readers based on the inclusion and exclusion criteria, and a total of 38 articles were found to be eligible. Upon further screening, 18 did not meet our criteria for the content we set out to assess, leaving 20 articles for this review (see Figure 1).
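The screening counts reported above can be checked with a few lines of arithmetic. The following Python sketch is only a consistency check on the numbers in the text; the function name `screening_flow` and the stage labels are hypothetical, and the full-text exclusion count is derived as 81 minus 38.

```python
# Consistency check on the reported screening counts (illustrative sketch).
def screening_flow(identified, exclusions):
    """Return (stage label, records remaining) after each exclusion step."""
    remaining = identified
    flow = [("records identified", remaining)]
    for reason, removed in exclusions:
        if removed > remaining:
            raise ValueError(f"cannot remove {removed} from {remaining} records")
        remaining -= removed
        flow.append((reason, remaining))
    return flow


EXCLUSIONS = [
    ("duplicates removed", 41),
    ("excluded on keywords, publication type, language, or year", 1542),
    ("full-text articles not eligible", 81 - 38),  # derived from the text
    ("excluded on further content screening", 18),
]

FLOW = screening_flow(1664, EXCLUSIONS)
for stage, remaining in FLOW:
    print(f"{remaining:>5}  {stage}")
```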

Results

The search yielded a total of 1,664 articles using the predetermined search criteria. After 41 duplicates were removed, book reviews, correspondence, editorials, podcasts, radio, TV, newspaper, print media, letters, notes, conference abstracts, short surveys, erratum, conference papers, or chapters were excluded. An additional 43 articles were deemed unsuitable for inclusion as they were not published in English, were not published in 2020, or their abstract did not contain the keywords “COVID-19,” “misinformation/disinformation,” and “social media.” A final 18 articles were removed because their content could not be extrapolated to the general populace, resulting in a total of 20 articles for the final analysis.

Table 1 reports the characteristics of the studies included in the review, which included eight cross-sectional studies, five qualitative content analyses, four discussion articles, one descriptive analysis of quantitative data, one narrative analysis, and one systematic literature review. These studies were generated from Bangladesh (n = 1), Ukraine (n = 1), United Kingdom (n = 2), United States (n = 3), Zimbabwe (n = 1), globally in which multiple languages were spoken (n = 5), and globally in which only English was spoken (n = 7).

The following three major themes emerged from the articles selected for this review: (1) sources of COVID-19 misinformation on social media, (2) impact of COVID-19 misinformation on social media, and (3) strategies to limit COVID-19 misinformation on social media.

Sources of Misinformation

Among the 20 articles, six focused on describing the source of misinformation. The most common social media platforms used included Twitter, YouTube, and Facebook, as well as websites dedicated to spreading COVID-19 misinformation. One article provided a global analysis of the rumors, stigma, and conspiracy theories across 25 languages from 87 countries, where 82% of 2,311 reports acquired from Twitter, Facebook, and online newspaper claims were false [4]. Two cross-sectional studies measured the quality and accuracy of the information about COVID-19 on YouTube [14,21]. On YouTube, 25% of the most viewed English videos involved non-factual or misleading information, and fewer than 4% of videos provided high-quality content on reducing COVID-19 transmission, specifically in dental practices [21].

Three studies conducted surveys to analyze factors contributing to the spread of misinformation on social media [6,22,23]. One study revealed that people driven by self-promotion and entertainment and those suffering from deficient self-regulation are more likely to share unsupported information [6]. A strong negative relationship was found between holding one or more conspiracy beliefs and following all health-protective behaviors. Among social media platforms, YouTube had the strongest association with conspiracy beliefs, followed by Facebook [22]. Individuals more aligned with liberal policy and less focused on social dominance were less likely to spread conspiracy-themed misinformation on social media as opposed to groups more oriented to social dominance who shared more beliefs on conspiracy theories and less on the severity and spread of COVID-19 [23]. The fight against misinformation should take place on the platform where it arises. It is important to analyze the context of fake news and why it is spreading [23]. Other sources of misinformation included messaging services such as WhatsApp and printed news articles. Rumors were rapidly spread through mobile instant messages, which required a micro-level approach against misinformation as opposed to a macro-level targeting legacy media (television and radio broadcasters, newspapers, and magazines) [24,25].

Impact of Misinformation

Three articles described the impact of misinformation, and two studies demonstrated the repercussions of healthcare advice and stigma [8,26]. Individuals who were highly engaged in social media platforms and believed in conspiracy theories were less likely to wear masks even when health officials recommended it [8]. With increased false claims on vaccination safety on social media, vaccine hesitancy also increased in those who believed conspiracy theories. It was found that 14.8% of the US population who participated in a study on stigma communication about COVID-19 on Twitter in the early stage of the outbreak believed that the pharmaceutical industry created the virus and 28.3% believed the Chinese government created the virus as a bioweapon [26]. There was a positive relationship between use of conservative media and social media and belief in conspiracy theories, whereas mainstream print media had a negative relationship with conspiracy theories, as did income, white racial identity, and education [8,26].

With regard to vaccination, the perceived threat to the participant and the United States contributed to the association between vaccine intention and conspiracy beliefs, but the belief that the measles, mumps, and rubella (MMR) vaccine is harmful was a strong contributor to vaccine hesitancy [8]. Vaccine intention was directly affected by conspiracy beliefs and indirectly by MMR perception of harm [8]. There was a positive relationship between reliance on mainstream television and vaccination, as well as reliance on social media and perception of MMR harm [8]. An analysis of Twitter aimed to identify the relationship between the presence of misinformation and conspiracy theories in COVID-19-related tweets and the stigmatization of the Asian population. From 155,353 unique COVID-19-related tweets posted between December 31, 2019, and March 13, 2020, more than half of the tweets fell in the classification of peril, which the researchers defined as information that links the stigmatized individual to threats such as physical or social danger [26]. Peril alone does not represent stigma; however, when complemented with other classifications, it indicated stigmatization of the Asian population in relation to COVID-19. In association with the Asian population, the conspiracy theories labeled COVID-19 as “Wuhan/Chinese Virus” [26].

Strategies to Limit Misinformation

More than half of the articles (11 of 20) examined strategies that could be employed to limit the spread of misinformation. There was discordance between studies regarding whose responsibility it was to combat misinformation spread throughout social media. Two studies found that individuals with a sizable social media following had an impact on denouncing misinformation [11,17]. Other studies concluded that those who had a professional responsibility to combat misinformation should take ownership, namely, international health agencies, scientists, health information professionals, and journalists [4,8,13]. Another study posited that support by conservative parties in these professions could confront conspiracy beliefs surrounding COVID-19 [8].

Four of the 11 articles analyzed how to intervene in misinformation. A study summarizing the World Health Organization Technical Consultation suggested that interventions should be based on science and evidence [27]. A content analysis of Twitter hashtags proposed that interventions involving multiple social media platforms are essential to disseminate reliable and vetted information [28]. Ahmed et al., on the other hand, proposed that the fight against misinformation should take place on the platform on which it arises [11]. When misinformation was identified, taking down accounts set up to spread misinformation was beneficial, and tagging misinformation as going against public health officials provided heightened transparency compared to tagging misinformation as harmful [11,29]. Based on the wide variety of findings, a multifaceted approach should be investigated.

Some studies in the review explored the topic of actionable items that could be imposed on the public to limit the spread of misinformation. One such suggestion was to implement a standard for validating professional status that could be displayed on social media to relay to the public the credibility of the information being presented [30]. Research has shown that an “accuracy nudge” intervention, which involved participants in assessing the accuracy of the news headline, was an effective way of preventing users on social media from spreading misinformation [31]. Both interventions demonstrated the utility of imposing additional scrutiny on the public’s consumption of information.

More than one-third (four of 11) of the articles discussed measures that could be taken to prevent misinformation from arising. For instance, a study in Zimbabwe found citizens were most likely to trust an international organization; separately, the National Association of County and City Health Officials encouraged local health departments to use social media to effectively disseminate evidence-based information to their local constituents [32,33]. Two studies encouraged strategic partnerships to be formed across all sectors to strengthen the analysis and amplification of science-based information [13,27]. Two studies encouraged translating this information into actionable behavior-change messages that should be presented in a culturally specific and community-targeted way to disseminate information to the public [27,32]. All these articles highlight the importance of proactive behavior in preventing the distribution of misinformation.

Discussion

The purpose of this study was to identify the sources and impact of COVID-19 misinformation on social media and examine potential strategies for limiting the spread of misinformation. From this review, three themes emerged: sources of misinformation, the impact of misinformation, and strategies to limit misinformation regarding COVID-19 on social media.

While the COVID-19 pandemic has highlighted numerous vulnerabilities in our public health system and social infrastructure, it is likely that issues of misinformation will be confronted in future public health crises. This study utilized a scoping review methodology to serve as a basis for formulating interventions for combatting COVID-19 misinformation on social media and preventing misinformation in the future.

Sources of misinformation discussed in the articles included Twitter, YouTube, Facebook, and websites dedicated to the dissemination of COVID-19 misinformation, along with mobile instant messaging and others [4,14,21]. Some studies described different sources of misinformation by identifying components such as percentages of both false and factual reports, number of views per source (individual accounts or entire platforms), and political policy alignment [8,26]. Other studies analyzed different forms of misinformation, such as rumors, stigmas, and conspiracy theories, along with factors and underlying context that led to their spread [8,26]. Each with their unique approach, all 20 articles demonstrated the complexity of identifying and analyzing different sources of misinformation related to COVID-19 in today’s vast social media realm. With an ever-growing number of social media platforms, misinformation has more potential than ever to be accessed by a wide range of users. Identifying such sources of misinformation would be a critical step in battling future public health threats.

With countless routes of spread, misinformation about COVID-19 has impacted public health efforts [6,8,26,34]. Dissemination of misinformation and conspiracy theories about COVID-19 via social media was found to be associated with a decrease in perceived pandemic threat, mask-wearing, and trust in COVID-19 vaccines [8]. Individuals responsible for sharing misinformation on social media displayed higher rates of addiction to social media, fatigue from accessing social media, and reluctance to follow COVID-19 public health recommendations [6]. Similarly, believers of COVID-19-related conspiracy theories were less likely to participate in preventative measures, including mask-wearing and vaccination against COVID-19 [8]. Beyond the effects of misinformation on individuals and public health efforts, studies demonstrated an increase in the stigmatization of Chinese individuals associated with social media postings blaming them for the pandemic or referring to COVID-19 as the “Wuhan or Chinese virus” [26]. Misinformation is certainly not novel, but social media has catapulted it to new heights. If proper measures are not taken, it will continue to impede future public health efforts and cause detrimental effects on the infrastructure of healthcare and society [34].

Many of the studies in the review investigated various strategies that may be used to limit the spread of misinformation. Among these, several attempted to identify whose responsibility it should be to combat misinformation. Some reports suggested those with professional obligations, such as health professionals, scientists, and local health departments, should take a leading role in combating misinformation [34]. Other sources demonstrated that public figures with a large following had a significant impact on denouncing misinformation [34]. Another common consideration was that how misinformation was received largely depended on how it was tagged (i.e., as harmful vs. going against public health officials) [11,28,29,30]. By collectively investigating different components, this scoping review has highlighted potential strategies for limiting the spread of misinformation related to COVID-19 [34]. Considering these different components, such as who should combat misinformation and methods to do so, will aid in curtailing the spread of misinformation during future pandemics or health threats [11,28,29,30].

Various approaches were used to identify, describe, and analyze misinformation on social media related to COVID-19. The many types of data collected across the studies were likely a result of the underlying complexity of social media and the different forms it can take. Despite having different study and analysis designs, the included articles contributed to overarching themes.

Limitations of included studies

With a topic as evolving as COVID-19 in 2020, the resulting articles in this review had their limitations in accurately representing the presently available data regarding misinformation on social media related to the virus. When considering a global pandemic, language restrictions (most commonly, English-only studies) may limit the generalizability of such studies to the public as semantics and context may get lost in translation. Another limitation in several of the study designs was the exclusion of potentially relevant data, while other limitations included small sample sizes and specific identifiers such as politics and religion. Additionally, the limit in search time frames, namely, cross-sectional studies, during a rapidly changing pandemic may have led to missed topics and important messages. Lastly, data from the included studies were subject to biases due to misclassification of qualitative data, self-reported data, the tendency of younger populations to use social media, and confounding variables such as media literacy or cognitive sophistication.

Limitations of the review process

First, there was a relatively narrow timeframe used to search for articles. As the course of the COVID-19 pandemic has undulated with the emergence of new strains, a search for articles within a distinct timeframe may not accurately represent the course of the COVID-19 pandemic, which is rapidly changing. Additionally, this review only included articles written in English, which may not be representative of the global pandemic. Lastly, relevant articles may have been excluded due to strict inclusion and exclusion criteria.

Considerations for future research

More research is needed to investigate methods used to combat misinformation to compare their efficacy and/or compare sources of education aimed to combat misinformation. Studies should also examine the role of misinformation in other public health crises, such as the Ebola virus epidemic or the anti-vaccination movement. Paralleling these findings with the results of this scoping review may reveal useful strategies for addressing misinformation regarding future public health crises.