The epic battle against coronavirus misinformation and conspiracy theories

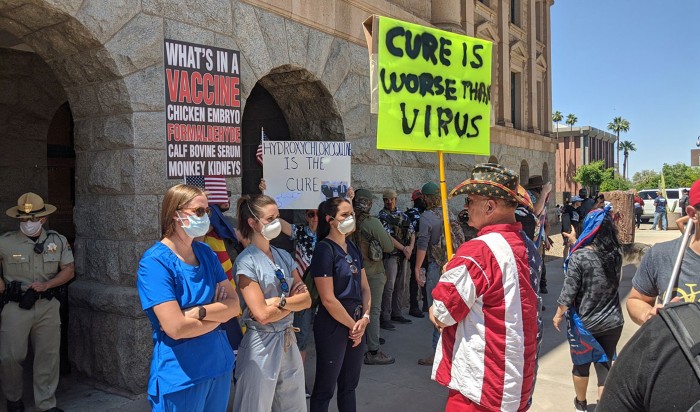

Protesters rallying in Arizona against lockdowns held up signs carrying anti-vaccine messages and promoting unproven treatments. Credit: Adam Waltz/ABC15 Arizona

In the first few months of 2020, wild conspiracy theories about Bill Gates and the new coronavirus began sprouting online. Gates, the Microsoft co-founder and billionaire philanthropist who has funded efforts to control the virus with treatments, vaccines and technology, had himself created the virus, argued one theory. He had patented it, said another. He’d use vaccines to control people, declared a third. The false claims quietly proliferated among groups predisposed to spread the message — people opposed to vaccines, globalization or the privacy infringements enabled by technology. Then one went mainstream.

On 19 March, the website Biohackinfo.com falsely claimed that Gates planned to use a coronavirus vaccine as a ploy to monitor people through an injected microchip or quantum-dot spy software. Two days later, traffic started flowing to a YouTube video promoting the idea, which has since been viewed nearly two million times. The idea reached Roger Stone, a former adviser to US President Donald Trump, who in April discussed the theory on a radio show, adding that he’d never trust a coronavirus vaccine that Gates had funded. The New York Post covered the interview without debunking the notion, and that article was then liked, shared or commented on by nearly one million people on Facebook. “That’s better performance than most mainstream media news stories,” says Joan Donovan, a sociologist at Harvard University in Cambridge, Massachusetts.

Donovan charts the path of this piece of disinformation like an epidemiologist tracking the transmission of a new virus. As with epidemics, there are ‘superspreader’ moments. After the New York Post story went live, several high-profile figures with nearly one million Facebook followers each posted their own alarming comments, as if the story about Gates devising vaccines to track people were true.

The Gates conspiracy theories are part of an ocean of misinformation on COVID-19 that is spreading online. Every major news event comes drenched in rumours and propaganda. But COVID-19 is “the perfect storm for the diffusion of false rumour and fake news”, says data scientist Walter Quattrociocchi at the Ca’ Foscari University of Venice, Italy. People are spending more time at home, and searching online for answers to an uncertain and rapidly changing situation. “The topic is polarizing, scary, captivating. And it’s really easy for everyone to get information that is consistent with their system of belief,” Quattrociocchi says. The World Health Organization (WHO) has called the situation an infodemic: “An over-abundance of information, some accurate and some not, rendering it difficult to find trustworthy sources of information and reliable guidance.”

For researchers who track how information spreads, COVID-19 is an experimental subject like no other. “This is an opportunity to see how the whole world pays attention to a topic,” says Renée DiResta at the Stanford Internet Observatory in California. She and many others have been scrambling to track and analyse the disparate falsehoods floating around: both ‘misinformation’, which is wrong but not deliberately misleading, and ‘disinformation’, which refers to organized falsehoods that are intended to deceive. In a global health crisis, inaccurate information doesn’t just mislead; it can be a matter of life and death if people start taking unproven drugs, ignore public-health advice or refuse a coronavirus vaccine if one becomes available.

By studying the sources and spread of false information about COVID-19, researchers aim to understand where such information comes from, how it grows and, ideally, how to elevate facts over falsehood. It’s a battle that can’t be won completely, researchers agree: it’s not possible to stop people from spreading ill-founded rumours. But in the language of epidemiology, the hope is to come up with effective strategies to ‘flatten the curve’ of the infodemic, so that bad information can’t spread as far and as fast.

No filter

Researchers have been monitoring the flow of information online for years, and have a good sense of how unreliable rumours start and spread. Over the past 15 years, technology and shifting societal norms have removed many of the filters that were once placed on information, says Amil Khan, director of the communications agency Valent Projects in London, who has worked on analysing misinformation for the UK government. Rumour-mongers who might once have been isolated in their local communities can connect with like-minded sceptics anywhere in the world. The social-media platforms they use are run to maximize user engagement, rather than to favour evidence-based information. As these platforms have exploded in popularity over the past decade and a half, so have political partisanship and the voices that distrust authority.

To chart the current infodemic, data scientists and communications researchers are now analysing millions of messages on social media. A team led by Emilio Ferrara, a data scientist at the University of Southern California in Los Angeles, has released a data set of more than 120 million tweets on the coronavirus¹. Theoretical physicist Manlio De Domenico at the Bruno Kessler Institute, a research institute for artificial intelligence in Trento, Italy, has set up what he calls a COVID-19 “infodemic observatory”, using automated software to watch 4.7 million tweets on COVID-19 streaming past every day. (The actual figure is higher, but that is as many as Twitter will allow the team to track.) De Domenico and his team evaluate the tweets’ emotional content and, where possible, the region they were sent from. They then estimate their reliability by looking at the sources to which a message links. (Like many data scientists, they rely on the work of fact-checking journalists to distinguish reliable news sources or claims from unreliable ones.) Similarly, in March, Quattrociocchi and his co-workers reported² a data set of around 1.3 million posts and 7.5 million comments on COVID-19 from several social-media platforms, including Reddit, WhatsApp, Instagram and Gab (known for its right-wing audience), from 1 January to mid-February.

A study in 2018 suggested that false news generally travels faster than reliable news on Twitter³. But that isn’t necessarily the case in this pandemic, says Quattrociocchi. His team followed some examples of false and true COVID-19 news (as classified by fact-checker sites) and found that reliable posts saw as many reactions as unreliable posts on Twitter². The analysis is preliminary and hasn’t yet been peer reviewed.

Ferrara says that in the millions of tweets about the coronavirus in January, misinformation didn’t dominate the discussion. Much of the confusion at the start of the pandemic related to fundamental scientific uncertainties about the outbreak. Key features of the virus — its transmissibility, for instance, and its case-fatality rate — could be estimated only with large error margins. Where expert scientists were honest about this, says biologist Carl Bergstrom at the University of Washington in Seattle, it created an “uncertainty vacuum” that allowed superficially reputable sources to jump in without real expertise. These included academics with meagre credentials for pronouncing on epidemiology, he says, or analysts who were good at crunching numbers but lacked a deep understanding of the underlying science.

Politics and scams

As the pandemic shifted to the United States and Europe, false information increased, says Donovan. A sizeable part of the problem has been political. A briefing prepared for the European Parliament in April alleged that Russia and China are “driving parallel information campaigns, conveying the overall message that democratic state actors are failing and that European citizens cannot trust their health systems, whereas their authoritarian systems can save the world.” The messages of US President Donald Trump and his administration are sowing their own political chaos. This includes Trump’s insistence on referring to the ‘Chinese’ or ‘Wuhan’ coronavirus and his advocacy of unproven (and even hazardous) ‘cures’, and the allegation by US Secretary of State Mike Pompeo that the virus originated in a laboratory, despite the lack of evidence.

There are organized scams, too. More than 68,000 website domains have been registered this year with keywords associated with the coronavirus, says Donovan. She’s reviewed ones that sell fake treatments for COVID-19, and others that collect personal information. Google’s search-engine algorithms rank information from the WHO and other public-health agencies higher than that from other sources, but rankings vary depending on what terms a person enters in a search. Some scam sites have managed to come out ahead by using a combination of keywords optimized and targeted to a particular audience, such as newly unemployed people, Donovan says.

Spreading agendas

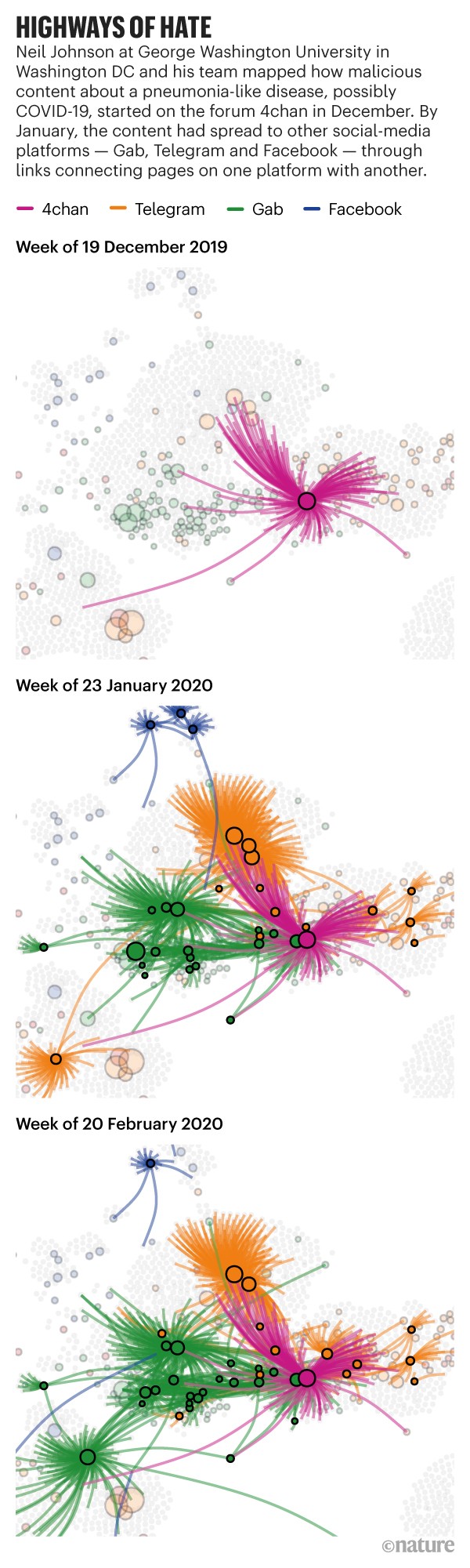

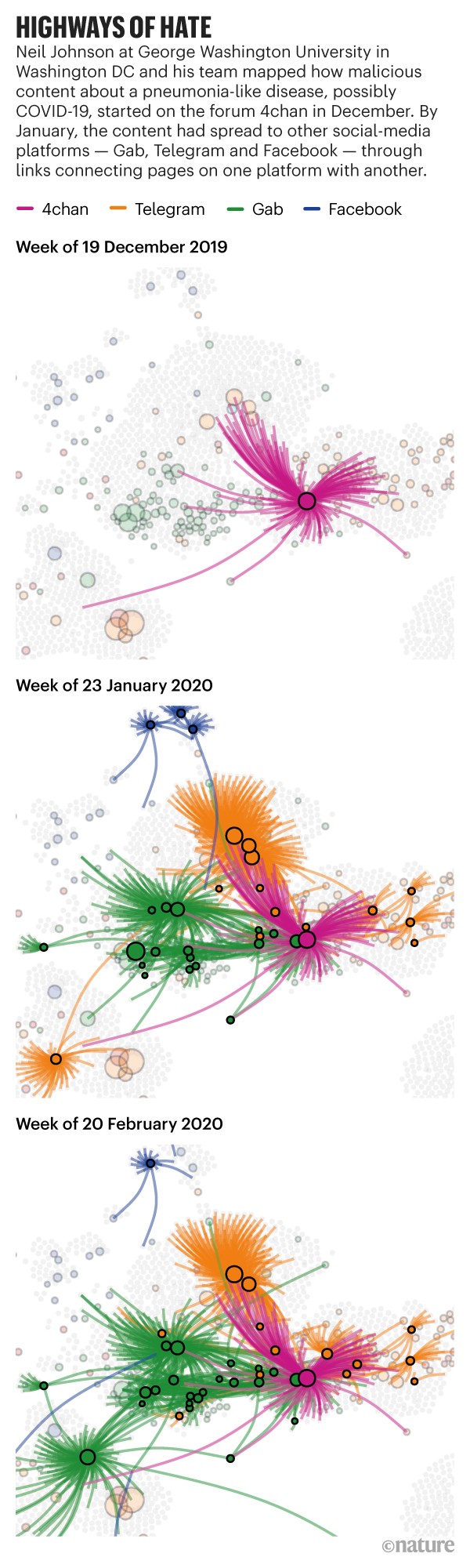

Many of the falsehoods online don’t have obvious sources or intentions. Rather, they often begin with niche groups mobilizing around their favoured agendas. Neil Johnson, a physicist at George Washington University in Washington DC, has reported⁴ COVID‑19 misinformation narratives taking shape among online communities of extremist and far-right ‘hate’ groups, which occupy largely unregulated platforms including VKontakte, Gab and 4Chan, as well as mainstream ones such as Facebook and Instagram.

The study says that a “hate multiverse” is exploiting the COVID-19 pandemic to spread racism and other malicious agendas, focusing an initially rather diverse and incoherent set of messages into a few dominant narratives, such as blaming Jews and immigrants for starting or spreading the virus, or asserting that it is a weapon being used by the “Deep State” to control population growth (see ‘Highways of hate’).

An alarming feature of this network is its capacity to draw in outside users through what Johnson and his team call “wormhole” links. These are shortcuts into the hate network from communities engaged with quite different issues. The hate multiverse, the researchers say, “acts like a global funnel that can suck individuals from a mainstream cluster on a platform that invests significant resources in moderation, into less moderated platforms like 4Chan or Telegram”. As a result, Johnson says, racist views are starting to appear in the anti-vaccine communities, too. “The rise of fear and misinformation around COVID-19 has allowed promoters of malicious matter and hate to engage with mainstream audiences around a common topic of interest, and potentially push them toward hateful views,” his team says in the paper.

Donovan has seen odd bedfellows emerge in the trolling of the WHO’s director-general, Tedros Adhanom Ghebreyesus. US-based groups that often post white-nationalist content are circulating racist cartoons of him that are similar to those posted by activists in Taiwan and Hong Kong. The latter groups have long criticized the WHO as colluding with the Chinese Communist Party, because the WHO, like all United Nations agencies, treats both regions as part of China. “We’re seeing some unusual alliances coming together,” Donovan says.

Dangerous spread

As misinformation grows, it sometimes becomes deadly. On Twitter in early March, technology entrepreneurs and investors shared a document prematurely extolling the benefits of chloroquine, an old malaria drug, as an antiviral against COVID‑19. The document, which claimed that the drug had produced favourable outcomes in China and South Korea, was widely passed around even before the results of a small, non-randomized French trial of the related drug hydroxychloroquine⁵ were posted online on 17 March. The next day, Fox News aired a segment with one of the authors of the original document. And the following day, Trump called the drugs “very powerful” at a press briefing, despite the lack of evidence. Using Google Trends, Donovan found small spikes in mid-March in Google searches for hydroxychloroquine, chloroquine and the related compound quinine, with the largest surge on the day of Trump’s remarks. “Just like toilet paper, masks and hand sanitizer, if there was a product to be had, it would have sold out,” she says. Indeed, the drugs did sell out in some places, worrying people who need them to treat conditions such as lupus. Hospitals have reported poisonings in people who experienced toxic side effects from pills containing chloroquine, and so many people with COVID-19 have been asking for the drug that the demand has derailed clinical trials of other treatments.

Fox News has been particularly scrutinized for its part in amplifying dangerous misinformation. In a phone survey of 1,000 randomly chosen Americans in early March⁶, communication researchers found that respondents who tended to get their information from mainstream broadcast and print media had more accurate ideas about the disease’s lethality and how to protect themselves from infection than did those who got their news mostly from conservative media (such as Fox News and Rush Limbaugh’s radio show) or from social media. That held true even after factors such as political affiliation, gender, age and education were controlled for.

Those results echo another study, as yet not peer reviewed, in which economists at the University of Chicago in Illinois tried to analyse the effects of two Fox News presenters on viewers’ opinions during February, as the coronavirus began to spread beyond China. One presenter, Sean Hannity, downplayed the coronavirus’s risk and accused Democrats of using it as a weapon to undermine the president; the other, Tucker Carlson, reported that the disease was serious. The study found that areas of the country where more viewers watched Hannity saw more cases and deaths than did those where more watched Carlson — a divergence that disappeared when Hannity adjusted his position to take the pandemic more seriously.

De Domenico says he is encouraged that, as the crisis has deepened, so has many people’s determination to find more reliable information. “When COVID-19 started to hit each country, we have observed dramatic changes of attitude,” he says. “People started to consume and share more reliable news from trusted sources.” Of course, the goal is to have people listening to the best available advice on risk before they watch people die around them, Donovan says.

Flattening the curve

In March, Brazilian President Jair Bolsonaro began to spread misinformation on social media — posting a video that falsely said hydroxychloroquine was an effective treatment for COVID-19 — but was stopped in his tracks. Twitter, Facebook and YouTube took the unprecedented step of deleting posts from a head of state, on the grounds that they could cause harm.

Social-media platforms have stepped up their efforts to flag or remove misinformation and to guide people to reliable sources. In mid-March, Facebook, Google, LinkedIn, Microsoft, Reddit, Twitter and YouTube issued a joint statement saying that they were working together on “combating fraud and misinformation about the virus”. Facebook and Google have banned advertisements for ‘miracle cures’ or overpriced face masks, for example. YouTube is promoting ‘verified’ information videos about the coronavirus.

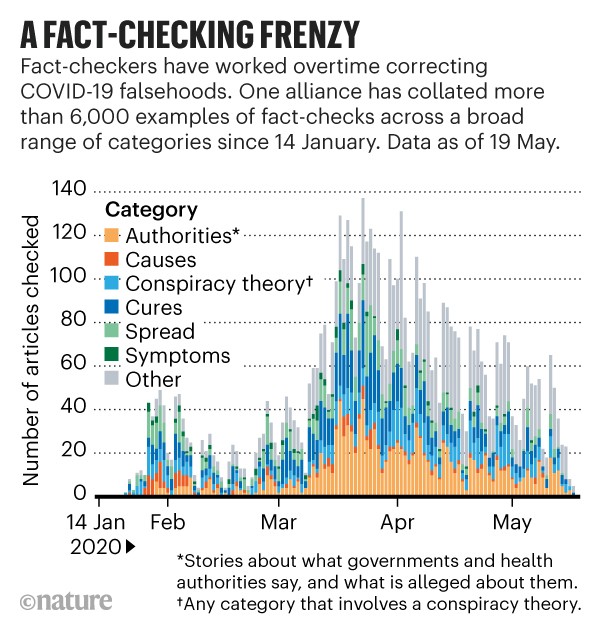

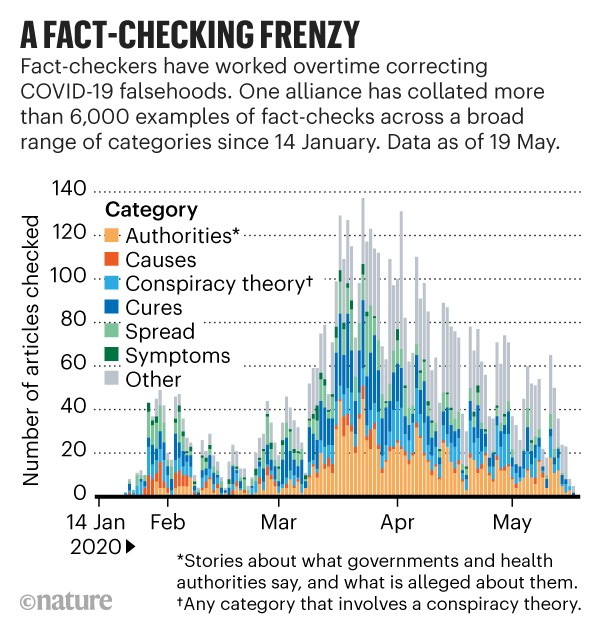

Social-media platforms often rely on fact-checkers at independent media organizations to flag up misleading content. In January, 88 media organizations around the world joined together to record their fact-checks of COVID-19 claims in a database maintained by the International Fact-checking Network (IFCN), part of the Poynter Institute for Media Studies in St Petersburg, Florida (see ‘A fact-checking frenzy’). The database currently holds more than 6,000 examples, and the IFCN is now inviting academics to dig into the data. (Another site, Google’s Fact Check Explorer, records more than 2,700 fact-checks about COVID-19.) But some fact-checking organizations, such as Snopes, have admitted to being overwhelmed by the quantity of information they are having to deal with. “The problem with infodemics is its huge scale: collectively, we are producing much more information than what we can really parse and consume,” says De Domenico. “Even having thousands of professional fact-checkers might not be enough.”

Communication scholar Scott Brennen at the Oxford Internet Institute, UK, and his co-workers have found that social-media companies have done a decent job of removing misleading posts, given the difficulty of the task. The team followed up 225 pieces of misinformation about the coronavirus that independent fact-checkers had collated in the IFCN or Google databases as false or misleading. In a 7 April report, the team found that by the end of March, only some 25% of these false claims remained in place without warning labels on YouTube and Facebook, although on Twitter that proportion was 59% (see go.nature.com/2tvhuj5). And Ferrara says that about 5% of the 11 million Twitter users his team has studied so far in its COVID-19 database have been shut down for violating the platform’s policies of use, and that these tended to be unusually active accounts.

But some creators of content have found ways to delay detection by social-media moderators, Donovan notes, in what she calls “hidden virality”. One way is to post content in private groups on Facebook. Because the platform relies largely on its users to flag up bad information, shares of misleading posts in private communities are flagged less often because everyone in the group tends to agree with one another, she says. Donovan used to study white supremacy online, and says a lot of ‘alt-right’ content wasn’t flagged until it leaked into public Facebook domains. Using CrowdTangle, a social-media-tracking tool owned by Facebook, Donovan found that more than 90% of the million or so interactions referring to the New York Post article about the Gates vaccine conspiracy were on private pages.

Another way in which manipulators slip past moderation is by sharing the same post from a new location online, says Donovan. For instance, when people on Facebook began sharing an article that alleged that 21 million people had died of COVID-19 in China, Facebook put a label on the article to indicate that it contained dubious information, and limited its ranking so that it wasn’t prioritized in a search (China has confirmed far fewer deaths: 4,638). Immediately, however, people began posting a copy of the article that had been stored on the Internet Archive, a website that preserves content. This copy was shared 118,000 times before Facebook placed a warning on the link. In another case, Medium removed a post from its site because it falsely claimed that all biomedical information known about COVID-19 was wrong and advanced a dubious theory. The post picked up some shares before it was taken down, but an archived version remains. That copy has garnered 1.6 million interactions and 310,000 shares on Facebook, numbers that are still climbing.

Quattrociocchi says that, faced with regulation of content on platforms such as Twitter and Facebook, some misinformation simply migrates elsewhere: regulation is currently worse, he says, on Gab and WhatsApp. And there is only so much you can do to police social media: “If someone is really committed,” says Ferrara, “once you suspend them, they go back and create another account.”

Donovan agrees, but argues that social-media companies could implement stronger, faster moderation, such as finding when posts that have already been flagged, or deleted, are revived with alternative links. In addition, she says, social-media firms might need to adjust their policies on permitting political discourse when it threatens lives. She says that health misinformation is increasingly being buried in messages that seem strictly political at first glance. A Facebook group urging protests against stay-at-home restrictions — Re-Open Alabama — featured a video (viewed 868,000 times) of a doctor saying that his colleagues have determined that COVID-19 is similar to influenza, and “it shows healthy people don’t need to shelter in place anymore”. Those messages could lead people to ignore public-health guidance and endanger many others, says Donovan. But Facebook has been slow to curb these messages because they seem to be expressing political opinions. “It’s important to demonstrate to platform companies that they aren’t moderating political speech,” Donovan says. “They need to look at what kind of health misinformation backs their claims that restrictions are unjustified.” (Facebook did not reply to a request for comment.)

Donovan is trying to teach others to spot the trail of misinformation: as with a viral outbreak, it’s easier to curb the spread of misinformation if it’s spotted close to its source, when fewer people have been exposed. She has grants of more than US$1 million from funders, including the Hewlett Foundation in Menlo Park, California, and the Ford Foundation in New York City, to collect case studies of the way misinformation spreads, and to use them to teach journalists, university researchers and policymakers how to analyse data on posts and their share patterns.

Gaining trust

Efforts to raise the profile of good information, and slap a warning label on the bad, can only go so far, says DiResta. “If people think the WHO is anti-American, or Anthony Fauci is corrupt, or that Bill Gates is evil, then elevating an alternative source doesn’t do much — it just makes people think that platform is colluding with that source,” she says. “The problem isn’t a lack of facts, it’s about what sources people trust.”

Brennen agrees. “The people in conspiracy communities think that they are doing what they should: being critical consumers of media,” he says. “They think they are doing their own research, and that what the consensus might advocate is itself misinformation.”

That sentiment could grow if public-health authorities don’t inspire confidence when they change their advice from week to week (on facemasks, for example, or on immunity to COVID-19). Some researchers say the authorities could be doing a better job of explaining the evidence, or lack of it, that guided them.

For now, US polling suggests that the public still supports vaccines. But anti-vaccine protesters are making more noise. At rallies protesting against lockdowns in California in May, for instance, some protesters carried signs saying, “No Mandatory Vaccines”. Anti-vaccination online hubs are leaping on to COVID-19, says Johnson. “It’s almost like they’ve been waiting for this. It crystallizes everything they’ve been saying.”

Nature 581, 371-374 (2020)

References

1. Chen, E., Lerman, K. & Ferrara, E. Preprint at https://arxiv.org/abs/2003.07372 (2020).
2. Cinelli, M. et al. Preprint at https://arxiv.org/abs/2003.05004 (2020).
3. Vosoughi, S., Roy, D. & Aral, S. Science 359, 1146–1151 (2018).
4. Velásquez, N. et al. Preprint at https://arxiv.org/abs/2004.00673 (2020).
5. Gautret, P. et al. Int. J. Antimicrob. Agents https://doi.org/10.1016/j.ijantimicag.2020.105949 (2020).
6. Jamieson, K. H. & Albarracín, D. Harvard Kennedy Sch. Misinform. Rev. https://doi.org/10.37016/mr-2020-012 (2020).
